Large Language Models (LLMs) have made significant advances in the field of Information Extraction (IE). Information extraction is a task in Natural Language Processing (NLP) that involves identifying and extracting specific pieces of information from text. LLMs have shown strong results in IE, especially when combined with instruction tuning. Through instruction tuning, these models are trained to annotate text according to predefined annotation guidelines, which improves their ability to generalize to new datasets. This means that even on unseen data, the models can perform IE tasks successfully by following instructions.

However, even with these improvements, LLMs still face many difficulties when working with low-resource languages. These languages lack both the unlabeled text required for pre-training and the labeled data required for fine-tuning. Because of this lack of data, it is difficult for LLMs to achieve good performance in these languages.

To overcome this, a team of researchers from the Georgia Institute of Technology has introduced the TransFusion framework. In TransFusion, models are fine-tuned to work with data translated from low-resource languages into English. With this method, both the original low-resource language text and its English translation provide information that the models can use to make more accurate predictions.

The framework aims to improve IE in low-resource languages by using external Machine Translation (MT) systems. It involves three main steps:

- Translation during inference: converting low-resource language data into English so that a high-resource model can annotate it.

- Fusion of annotated data: a model trained to use both kinds of input fuses the original low-resource language text with the annotated English translation.

- TransFusion reasoning chain: integrating both annotation and fusion into a single autoregressive decoding pass.
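To make the three steps concrete, here is a minimal, self-contained sketch of the data flow. It is not the researchers' implementation: the translation table, the English "annotator" and the fusion rule are hypothetical toy stand-ins (a dictionary lookup instead of a real MT system, a gazetteer instead of an English IE model, and verbatim string matching instead of a learned fusion model). The output serialization is also an assumed format, used only to show how annotation and fusion can be emitted as one sequence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    text: str
    label: str


# Hypothetical toy stand-ins for the external MT system and the English IE model.
TOY_TRANSLATIONS = {"Juma alikwenda Nairobi": "Juma went to Nairobi"}
TOY_ENGLISH_GAZETTEER = {"Juma": "PERSON", "Nairobi": "LOCATION"}


def translate_to_english(text: str) -> str:
    """Step 1: translation at inference time (toy lookup, not a real MT system)."""
    return TOY_TRANSLATIONS[text]


def annotate_english(text: str) -> list[Entity]:
    """High-resource side: annotate the English translation (toy gazetteer)."""
    return [
        Entity(tok, TOY_ENGLISH_GAZETTEER[tok])
        for tok in text.split()
        if tok in TOY_ENGLISH_GAZETTEER
    ]


def fuse(source: str, english_entities: list[Entity]) -> list[Entity]:
    """Step 2: fuse English annotations with the source text.

    A trained model learns this step; this toy rule only keeps entities
    whose surface form appears verbatim in the source sentence.
    """
    return [e for e in english_entities if e.text in source]


def format_entities(entities: list[Entity]) -> str:
    return "; ".join(f"{e.label}({e.text})" for e in entities)


def transfusion_chain(source: str) -> str:
    """Step 3: serialize annotation and fusion as one output sequence.

    Hypothetical format: source and translation are the input; a fine-tuned
    model would emit both annotation lines in a single decoding pass.
    """
    english = translate_to_english(source)
    en_entities = annotate_english(english)
    fused = fuse(source, en_entities)
    return "\n".join([
        f"Source: {source}",
        f"Translation: {english}",
        f"English annotations: {format_entities(en_entities)}",
        f"Final annotations: {format_entities(fused)}",
    ])


print(transfusion_chain("Juma alikwenda Nairobi"))
```

The point of the single chain is that the model conditions its final, source-language annotations on the English annotations it has just produced, rather than running two separate systems.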

Building on this structure, the team has also introduced GoLLIE-TF, a cross-lingual, instruction-tuned LLM tailored specifically for IE tasks. GoLLIE-TF aims to reduce the performance gap between high- and low-resource languages. The combined goal of the TransFusion framework and GoLLIE-TF is to improve LLMs' effectiveness when handling low-resource languages.

Experiments on twelve multilingual IE datasets, covering a total of fifty languages, have shown that GoLLIE-TF works well. Compared to the base model, GoLLIE-TF achieves better zero-shot cross-lingual transfer. This means that, without additional training data, it can more effectively apply its acquired skills to new languages.

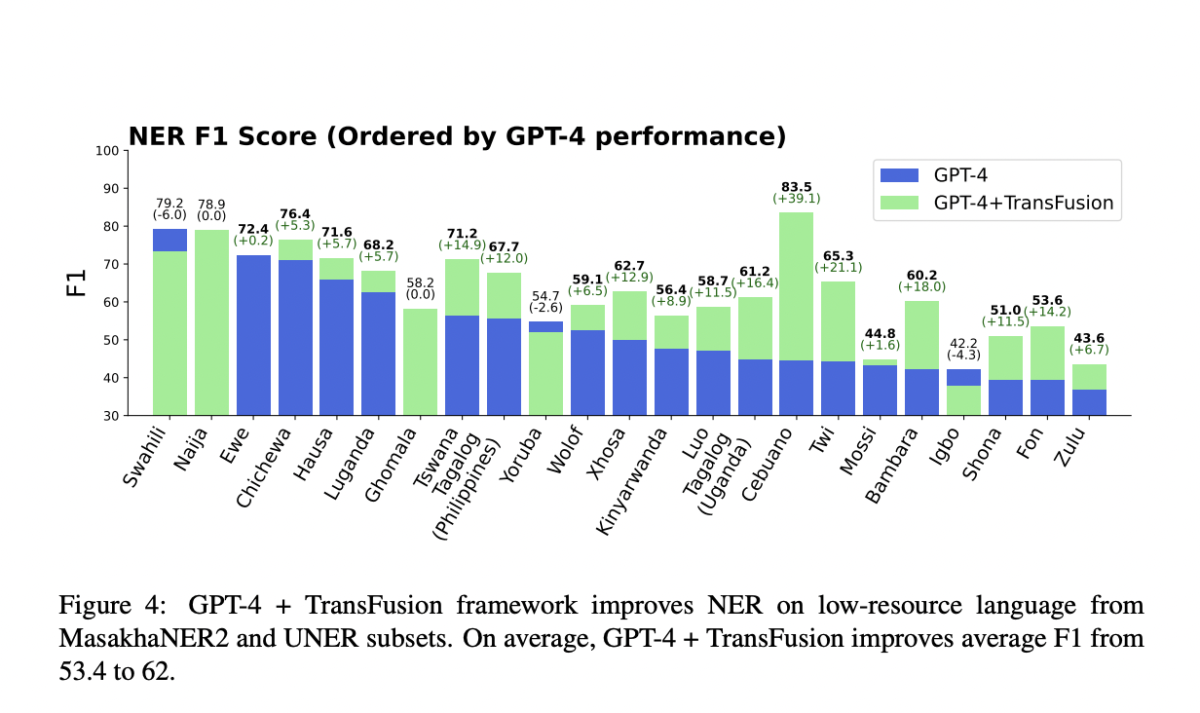

Applying TransFusion to proprietary models such as GPT-4 considerably improves named entity recognition (NER) performance in low-resource languages. With prompting alone, GPT-4's performance increased by 5 F1 points. Further improvements came from fine-tuning various model types with the TransFusion framework: decoder-only architectures improved by 14 F1 points, while encoder-only architectures improved by 13 F1 points.
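The gains above are reported in F1 points, i.e. absolute differences on a 0 to 100 scale, so going from 50 to 55 F1 is a 5-point gain (a 10% relative gain). As a sketch of how such a score is typically computed for NER, the snippet below assumes micro-averaged, exact-match F1 over (span, label) pairs; the entities and predictions are made-up toy data, not results from the paper, and the paper's exact evaluation protocol may differ.

```python
def entity_f1(gold: set[tuple[str, str]], predicted: set[tuple[str, str]]) -> float:
    """Micro F1 over exact (span, label) matches, on a 0-100 scale."""
    if not gold and not predicted:
        return 100.0
    true_pos = len(gold & predicted)  # a prediction counts only if span AND label match
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 100 * 2 * precision * recall / (precision + recall)


# Toy example (hypothetical data): a wrong label on "Nairobi" counts as an error.
gold = {("Juma", "PER"), ("Nairobi", "LOC"), ("Kenya", "LOC"), ("Amina", "PER")}
baseline = {("Juma", "PER"), ("Nairobi", "ORG")}
improved = {("Juma", "PER"), ("Nairobi", "LOC"), ("Kenya", "LOC")}

gain = entity_f1(gold, improved) - entity_f1(gold, baseline)
print(f"baseline {entity_f1(gold, baseline):.2f}, "
      f"improved {entity_f1(gold, improved):.2f}, gain {gain:.2f} F1 points")
```

Exact matching is strict by design: a correct span with the wrong type earns no credit, which is why label accuracy matters as much as span detection in these comparisons.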

In conclusion, TransFusion and GoLLIE-TF together provide a powerful solution for improving IE in low-resource languages. The approach shows notable improvements across many models and datasets, helping to reduce the performance gap between high-resource and low-resource languages by using English translations and fine-tuning models to fuse annotations.

Check out the Paper. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.