University of Minnesota researchers have introduced a hardware innovation called CRAM that could cut AI energy use by up to 2,500 times by processing data within memory, promising significant advances in AI efficiency.

This device could slash artificial intelligence energy consumption by at least 1,000 times.

Engineering researchers at the University of Minnesota Twin Cities have developed an advanced hardware device that could decrease energy use in artificial intelligence (AI) computing applications by a factor of at least 1,000.

The research appears in npj Unconventional Computing, a peer-reviewed scientific journal published by Nature. The researchers hold several patents on the technology used in the device.

With the growing demand for AI applications, researchers have been looking for ways to make the computation more energy efficient while keeping performance high and costs low. Typically, machine learning and other AI processes transfer data back and forth between logic (where information is processed within a system) and memory (where the data is stored), and these transfers consume a large amount of power and energy.

Introduction of CRAM Technology

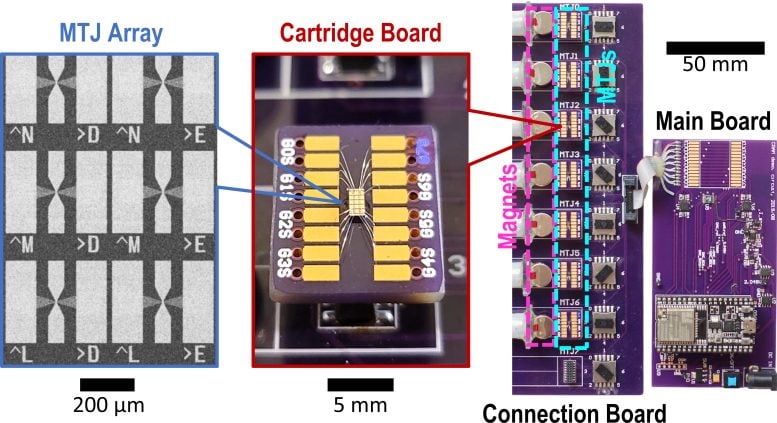

A crew of researchers on the College of Minnesota Faculty of Science and Engineering demonstrated a brand new mannequin the place the information by no means leaves the reminiscence, known as computational random-access reminiscence (CRAM).

“This work is the primary experimental demonstration of CRAM, the place the information will be processed solely inside the reminiscence array with out the necessity to go away the grid the place a pc shops info,” stated Yang Lv, a College of Minnesota Division of Electrical and Pc Engineering postdoctoral researcher and first creator of the paper.

A custom-built {hardware} system plans to assist synthetic intelligence be extra vitality environment friendly. Credit score: College of Minnesota Twin Cities

The International Energy Agency (IEA) issued a global energy use forecast in March of 2024, projecting that energy consumption for AI is likely to double from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026. That 2026 figure is roughly equivalent to the electricity consumption of the entire country of Japan.

According to the new paper’s authors, a CRAM-based machine learning inference accelerator is estimated to achieve an energy-efficiency improvement on the order of 1,000 times. Other examples showed energy savings of 2,500 and 1,700 times compared with traditional methods.

Evolution of the Research

This research has been more than two decades in the making.

“Our initial concept to use memory cells directly for computing 20 years ago was considered crazy,” said Jian-Ping Wang, the senior author on the paper and a Distinguished McKnight Professor and Robert F. Hartmann Chair in the Department of Electrical and Computer Engineering at the University of Minnesota.

“With an evolving group of students since 2003 and a truly interdisciplinary faculty team built at the University of Minnesota (from physics, materials science and engineering, computer science and engineering, to modeling and benchmarking, and hardware creation), we were able to obtain positive results and now have demonstrated that this kind of technology is feasible and is ready to be incorporated into technology,” Wang said.

This research is part of a coherent and long-standing effort building upon Wang’s and his collaborators’ groundbreaking, patented research into magnetic tunnel junction (MTJ) devices. MTJs are nanostructured devices used to improve hard drives, sensors, and other microelectronic systems, including magnetic random-access memory (MRAM), which has been used in embedded systems such as microcontrollers and smartwatches.

The CRAM architecture enables true computation in and by memory and removes the wall between computation and memory that creates the bottleneck in the traditional von Neumann architecture. The von Neumann architecture is a theoretical design for a stored-program computer and serves as the basis for almost all modern computers.

“As an extremely energy-efficient digital-based in-memory computing substrate, CRAM is very flexible in that computation can be performed in any location in the memory array. Accordingly, we can reconfigure CRAM to best match the performance needs of a diverse set of AI algorithms,” said Ulya Karpuzcu, an expert on computing architecture, co-author on the paper, and Associate Professor in the Department of Electrical and Computer Engineering at the University of Minnesota. “It is more energy-efficient than traditional building blocks for today’s AI systems.”

CRAM performs computations directly within memory cells and uses the array structure efficiently, which eliminates the need for slow and energy-intensive data transfers, Karpuzcu explained.
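The data-movement argument can be made concrete with a toy energy budget. The sketch below is purely illustrative: the `EnergyModel` class and every per-operation cost in it are hypothetical values, not measurements from the paper, and it generously assumes an in-memory operation costs the same as a conventional one. It only shows why, when moving a word costs much more than computing on it, removing the transfers dominates the savings.

```python
from dataclasses import dataclass


@dataclass
class EnergyModel:
    """Toy per-operation energy budget in picojoules (all values hypothetical)."""
    compute_pj: float            # energy of the logic/arithmetic operation itself
    transfer_pj_per_word: float  # energy to move one word between memory and logic
    words_moved_per_op: int      # e.g. two operands in, one result out

    def total_pj(self, n_ops: int) -> float:
        """Total energy for n_ops operations, including data movement."""
        per_op = self.compute_pj + self.transfer_pj_per_word * self.words_moved_per_op
        return n_ops * per_op


# Hypothetical numbers chosen only to show the shape of the argument.
von_neumann = EnergyModel(compute_pj=1.0, transfer_pj_per_word=20.0, words_moved_per_op=3)
in_memory = EnergyModel(compute_pj=1.0, transfer_pj_per_word=0.0, words_moved_per_op=0)

n = 1_000_000
vn = von_neumann.total_pj(n)
im = in_memory.total_pj(n)
print(f"von Neumann: {vn:.3e} pJ, in-memory: {im:.3e} pJ, ratio: {vn / im:.0f}x")
```

With these made-up costs the ratio comes out at 61 times; the number depends entirely on the assumed values and is unrelated to the roughly 1,000-times estimate the authors report.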

The most efficient short-term random-access memory (RAM) device uses four or five transistors to code a one or a zero, but a single MTJ, a spintronic device, can perform the same function at a fraction of the energy and at higher speed, and it is resilient to harsh environments. Spintronic devices leverage the spin of electrons rather than their electrical charge to store data, providing a more efficient alternative to traditional transistor-based chips.
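A toy circuit model can illustrate how a logic result might be computed inside an MTJ array without reading bits out to a processor. The sketch assumes a threshold-switching scheme of the kind often described for MTJ logic-in-memory: two input MTJs wired in parallel, in series with an output MTJ that is preset to 0 and flips to 1 only if the current exceeds a critical value. Treat it as an assumption-laden illustration rather than the paper’s circuit; the function names, resistances, voltage, and critical current are all hypothetical, and real MTJ switching is stochastic and depends on pulse duration.

```python
from itertools import product

# Hypothetical device parameters for illustration only; not values from the paper.
R_P = 1_000.0     # ohms: parallel (low-resistance) state, read here as logic 0
R_AP = 2_000.0    # ohms: antiparallel (high-resistance) state, read here as logic 1
V_LOGIC = 1.0     # volts applied across the gate during the logic step
I_CRIT = 0.55e-3  # amps: current above which the output MTJ switches from 0 to 1


def resistance(bit: int) -> float:
    """Return the resistance of an MTJ storing `bit`."""
    return R_AP if bit else R_P


def parallel(*rs: float) -> float:
    """Equivalent resistance of resistors connected in parallel."""
    return 1.0 / sum(1.0 / r for r in rs)


def threshold_nand(a: int, b: int) -> int:
    """Toy threshold gate: two input MTJs in parallel, in series with an output MTJ.

    The output MTJ is preset to 0. Inputs storing 0 (low resistance) let more
    current flow; if the current exceeds I_CRIT, the output switches to 1.
    Only when both inputs are 1 does the current stay below the threshold, so
    the output keeps its preset 0, which is NAND behavior.
    """
    output = 0  # preset step
    r_total = parallel(resistance(a), resistance(b)) + resistance(output)
    current = V_LOGIC / r_total
    if current > I_CRIT:
        output = 1
    return output


for a, b in product((0, 1), repeat=2):
    print(a, b, threshold_nand(a, b))
```

With these parameters the loop prints the NAND truth table (1, 1, 1, 0 for inputs 00, 01, 10, 11). In this toy model, moving the threshold to lie between the 00 and 01 currents (about 0.667 mA and 0.6 mA) would turn the same wiring into a NOR gate, which hints at how one array structure can be configured for different operations.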

The team now plans to work with semiconductor industry leaders, including those in Minnesota, to provide large-scale demonstrations and produce the hardware to advance AI functionality.

Reference: “Experimental demonstration of magnetic tunnel junction-based computational random-access memory” by Yang Lv, Brandon R. Zink, Robert P. Bloom, Hüsrev Cılasun, Pravin Khanal, Salonik Resch, Zamshed Chowdhury, Ali Habiboglu, Weigang Wang, Sachin S. Sapatnekar, Ulya Karpuzcu and Jian-Ping Wang, 25 July 2024, npj Unconventional Computing.

DOI: 10.1038/s44335-024-00003-3

In addition to Lv, Wang, and Karpuzcu, the team included University of Minnesota Department of Electrical and Computer Engineering researchers Robert Bloom and Hüsrev Cılasun; Distinguished McKnight Professor and Robert and Marjorie Henle Chair Sachin Sapatnekar; former postdoctoral researchers Brandon Zink, Zamshed Chowdhury, and Salonik Resch; and University of Arizona researchers Pravin Khanal, Ali Habiboglu, and Professor Weigang Wang.

This work was supported by grants from the U.S. Defense Advanced Research Projects Agency (DARPA), the National Institute of Standards and Technology (NIST), the National Science Foundation (NSF), and Cisco Inc. Research including nanodevice patterning was conducted in collaboration with the Minnesota Nano Center, and simulation and calculation work was done with the Minnesota Supercomputing Institute at the University of Minnesota.