This research was initiated to develop a novel AI-based drought index for drought assessment and monitoring based on various machine learning algorithms. The methodological framework for developing the drought index is illustrated in Fig. 2.

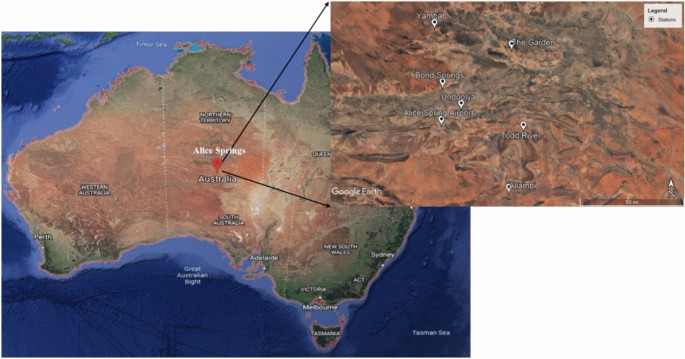

A set of climatic data (maximum temperature, precipitation, and potential evapotranspiration (PET) data), together with several drought indicators: (1) DI1: deep soil moisture, (2) DI2: lower soil moisture, (3) DI3: root zone soil moisture, (4) DI4: upper soil moisture, and (5) DI5: runoff, were collected from the seven meteorological stations as inputs for the AI models. A data quality check was carried out to remove errors, duplications, and/or inconsistencies. Furthermore, missing data entries were imputed using linear interpolation. Notably, maximum temperature data were sourced from four stations located across the study area (Alice Springs Airport, Curtin Springs, Kulgera, and Jervois station). In contrast, precipitation data were acquired from all seven stations. To reconcile this, temperature datasets from the four stations were transformed to match all seven stations using the inverse distance weighting (IDW) method. Moreover, PET was computed using the Thornthwaite method because of its minimal data requirement, namely average temperature and latitude [25].
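The IDW step can be illustrated with a minimal sketch. The source does not state the distance exponent or the coordinate system, so the power of 2, the planar coordinates, and the temperature values below are assumptions chosen only for illustration; `idw_estimate` is a hypothetical helper name.

```python
import math

def idw_estimate(target, stations, power=2.0):
    """Inverse distance weighted estimate at `target`.

    `stations` is a list of (x, y, value) tuples. The exponent `power`
    is an assumption; the source does not report the value used.
    """
    numerator = 0.0
    denominator = 0.0
    for x, y, value in stations:
        dist = math.hypot(target[0] - x, target[1] - y)
        if dist == 0.0:
            return value  # target coincides with a known station
        weight = 1.0 / dist ** power
        numerator += weight * value
        denominator += weight
    return numerator / denominator

# Hypothetical planar coordinates (km) and maximum temperatures (deg C)
known = [(0.0, 0.0, 30.0), (10.0, 0.0, 34.0), (0.0, 10.0, 32.0), (10.0, 10.0, 36.0)]
estimate = idw_estimate((5.0, 5.0), known)
```

Because the target point here is equidistant from all four hypothetical stations, every weight is equal and the estimate reduces to the plain average of the station values.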

The following procedures were applied to calculate drought indices using standard methods with the R, DMAP, and DrinC software. After the indices were estimated with the standard methods, a Pearson correlation analysis was used to compare the results of the standard models with the drought indicators. The correlation between the outputs from the drought indicators and the standard models was used to develop and validate AI-based drought assessment indices using different machine learning algorithms.

Standard drought indices

The present study aims to evaluate drought severity and duration through several standard drought indices, obtained from the Alice Springs meteorological stations. The standard meteorological drought indices used in this research included the SPI, SPEI, PDSI, percent of normal index (PNI), China-Z index (CZI), modified China-Z index (MCZI), rainfall anomaly index (RAI), Z-score index (ZSI), and RDI. Generally, the range of each index varies considerably, with negative and positive values denoting dry and wet conditions, respectively; the exception is the PNI, which has a range from 40 to 120. Details about the theory and ranges of each drought index can be found in the supplementary information.

Standardized precipitation index

The SPI was first developed in 1992 based on drought frequency, duration, and timescale. In terms of computation, the model relies on a single parameter, i.e., precipitation. This index has several advantages, including its flexibility to be calculated over a range of timeframes and its spatial consistency. Such a feature allows for comparisons among various locations under different climate conditions. The SPI was calculated using estimated precipitation probabilities for a given timescale, which were then transformed into an index. For any chosen location, the SPI was calculated using long-term precipitation data. The obtained data were fitted to a probability distribution and then transformed to a normal distribution. The SPI was calculated using the following probability density function based on precipitation data fitted by a gamma distribution (\(g(x)\)):

$$g\left(x\right)=\frac{1}{\beta^{\alpha}\Gamma\left(\alpha\right)}x^{\alpha-1}e^{-x/\beta},\quad x>0;\ \beta>0$$

(1)

where β is the scale parameter, α is the shape parameter, Γ(α) is the gamma function, and x is the amount of precipitation (mm).
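The step from the fitted density to the index value is not written out here. In the commonly used formulation (stated as an assumption, since the source defers the details to the supplementary information), the density is integrated to a cumulative probability \(G(x)\), adjusted for the probability \(q\) of zero precipitation, and mapped through the inverse standard normal distribution \(\Phi^{-1}\). The symbols \(G\), \(H\), \(q\), and \(\Phi\) are introduced only for this illustration:

$$G(x)=\int_{0}^{x}g(t)\,dt,\qquad H(x)=q+(1-q)\,G(x),\qquad SPI=\Phi^{-1}\left(H(x)\right)$$

Under this formulation, a precipitation total at the median of the fitted distribution (\(H(x)=0.5\)) gives an SPI of zero, and totals below the median give negative values.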

Standardized precipitation evapotranspiration index

The SPEI was calculated from PET data obtained with the Thornthwaite method, because it requires minimal data (i.e., average temperature and latitude) compared to other methods [25]. The computations for the PET and SPEI can be found in the supplementary information.

Palmer drought severity index

The PDSI is a method of evaluating moisture conditions in different locations and between different months based on standardized measurements of moisture conditions. An assessment of the PDSI is based on precipitation, temperature, and soil properties. Based on inputs such as evapotranspiration, soil recharge, runoff, and moisture loss from the surface layer, the water balance equation can be constructed. Moreover, the PDSI represents the drought history spatially and temporally. Although the PDSI is designed for agricultural applications, the model does not accurately reflect the effects of prolonged droughts on hydrology. The PDSI was computed based on the methodology developed by [26].

Percent of normal index

The PNI is used to classify droughts and determine the severity of meteorological events. The PNI was found to be efficient in evaluating drought conditions for individual seasons as well as for a specific region. Furthermore, this index can be calculated on different time scales, such as monthly, seasonal, and annual bases. The PNI was calculated as follows:

$$PNI=\frac{P_{i}}{P}\times 100$$

(2)

where \(P_i\) is the precipitation (mm) for time increment i and P is the normal precipitation (mm) for the chosen time frame.
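As a small worked illustration of Eq. (2), the sketch below computes the PNI for one month. The function name `pni` and the precipitation totals are hypothetical.

```python
def pni(precip, normal_precip):
    """Percent of normal index: precipitation as a percentage of the normal."""
    if normal_precip <= 0:
        raise ValueError("normal precipitation must be positive")
    return precip / normal_precip * 100.0

# Hypothetical monthly totals in mm: 18 mm observed against a 24 mm normal
value = pni(18.0, 24.0)
```

A value below 100 indicates precipitation below the normal for the chosen time frame.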

China-Z index and modified China-Z index

As an alternative to the SPI, the CZI was developed by the National Climate Center of China in 1995 [27]. The CZI was calculated as follows:

$$\text{CZI}_{ij}=\frac{6}{C_{si}}\times\left(\frac{C_{si}}{2}\times\varphi_{tj}+1\right)^{1/3}-\frac{6}{C_{si}}+\frac{C_{si}}{6}$$

(3)

where \(\text{CZI}_{ij}\) stands for the CZI of the current month (j) for time period i, i represents the time scale of interest, j is the current month, \(C_{si}\) is the skewness coefficient, and \(\varphi_{tj}\) is the standardized variation. Furthermore, the MCZI was calculated by substituting the median precipitation for the mean precipitation in the same equation.
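A minimal sketch of Eq. (3) follows. The source does not give formulas for the skewness coefficient or the standardized variation, so the moment-based definitions used here (population standard deviation, third central moment divided by the cubed standard deviation) are assumptions, as are the function name `czi_series` and the sample data.

```python
import math

def czi_series(precip):
    """China-Z index for each value in a precipitation series (Eq. 3)."""
    n = len(precip)
    mean = sum(precip) / n
    sd = math.sqrt(sum((p - mean) ** 2 for p in precip) / n)
    # Assumed definition: moment-based skewness coefficient
    skew = sum((p - mean) ** 3 for p in precip) / (n * sd ** 3)
    result = []
    for p in precip:
        phi = (p - mean) / sd  # assumed definition of the standardized variation
        if abs(skew) < 1e-12:
            result.append(phi)  # limit of Eq. (3) as the skewness tends to zero
            continue
        inner = skew / 2.0 * phi + 1.0
        # Real cube root, so a negative bracket does not produce a complex number
        root = math.copysign(abs(inner) ** (1.0 / 3.0), inner)
        result.append(6.0 / skew * root - 6.0 / skew + skew / 6.0)
    return result

# Hypothetical monthly precipitation totals (mm)
sample = [5.0, 12.0, 0.0, 30.0, 8.0, 2.0, 60.0, 15.0, 4.0, 9.0]
czi_values = czi_series(sample)
```

Replacing `mean` with the median of the series in the standardized variation would give the MCZI variant described above.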

Rainfall anomaly index

The RAI is a drought index that measures deviations of monthly or seasonal rainfall from the long-term average. The methodology involves calculating the standardized anomalies by comparing observed precipitation data against historical mean values. Positive and negative anomalies are quantified, providing an indication of wet and dry periods, respectively. The average of the ten highest values creates a threshold for the positive anomaly, and the average of the ten lowest values creates a threshold for the negative anomaly. The RAI was calculated as follows:

$$RAI=\pm 3\times\left[\frac{p-\overline{p}}{\overline{m}-\overline{p}}\right]$$

(4)

where p is the annual precipitation (mm), \(\overline{p}\) refers to the long-term average precipitation (mm), and \(\overline{m}\) is the mean of the ten highest/lowest values of p for the positive/negative anomalies.
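The sign convention in Eq. (4) can be made concrete with a short sketch: the prefactor is +3 for values at or above the long-term average and −3 below it. The function name `rai` and the 20-year series are hypothetical, and the requirement of more than ten values is a practical assumption so that the extreme means differ from the overall mean.

```python
def rai(p, series):
    """Rainfall anomaly index of value `p` relative to a historical series."""
    if len(series) <= 10:
        raise ValueError("need more than ten values to form the extreme means")
    mean_p = sum(series) / len(series)
    ranked = sorted(series)
    if p >= mean_p:
        m = sum(ranked[-10:]) / 10.0  # mean of the ten highest values
        return 3.0 * (p - mean_p) / (m - mean_p)
    m = sum(ranked[:10]) / 10.0  # mean of the ten lowest values
    return -3.0 * (p - mean_p) / (m - mean_p)

# Hypothetical 20-year annual precipitation series (mm): 100, 120, ..., 480
history = [float(v) for v in range(100, 500, 20)]
wet = rai(390.0, history)
dry = rai(190.0, history)
```

In this example the long-term average is 290 mm, and the two test values equal the means of the ten highest and ten lowest years, so they land exactly on the +3 and −3 thresholds.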

Z-score index

The ZSI estimates the monthly moisture anomaly by assessing the deviation from normal wet conditions in a given month. Despite the similarities between the ZSI and SPI, the ZSI does not require fitting the precipitation data to gamma and Pearson type III distributions. The following equation was used to calculate the ZSI:

$$ZSI=\frac{P_{i}-\overline{P}}{SD}$$

(5)

where \(\overline{P}\) represents the average monthly precipitation (mm), \(P_i\) is the precipitation for a specified month (mm), and SD refers to the standard deviation of the time series (mm).
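Eq. (5) is a plain standardization, sketched below. Whether the population or sample standard deviation was used is not stated in the source; the population form (`statistics.pstdev`) is an assumption here, and the function name `zsi` and the data are hypothetical.

```python
import statistics

def zsi(series):
    """Z-score index for every value in a monthly precipitation series."""
    mean = statistics.mean(series)
    sd = statistics.pstdev(series)  # assumption: population standard deviation
    return [(p - mean) / sd for p in series]

# Hypothetical monthly precipitation totals (mm)
scores = zsi([10.0, 20.0, 30.0])
```

Values below zero mark months drier than the series average, and values above zero mark wetter months.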

Reconnaissance drought index

The RDI is based on the measured precipitation and PET, and it is expressed in three forms: the RDI initial value (\(\alpha_k\)), the standardized RDI (\(RDI_{st}\)), and the normalized RDI (\(RDI_n\)). A composite cumulative distribution function was used to calculate the RDI, which incorporates the probability of zero precipitation and the gamma cumulative probability of precipitation. The \(\alpha_k\) was presented as an aggregated value for each month, season, and year, and it is usually calculated for year i and a time basis of k months according to the equation below:

$$\alpha_{k}^{(i)}=\frac{\sum_{j=1}^{k}P_{ij}}{\sum_{j=1}^{k}PET_{ij}}$$

(6)

where \(P_{ij}\) is the precipitation and \(PET_{ij}\) is the potential evapotranspiration of month j for hydrological year i. The \(RDI_{st}\) was calculated by fitting the gamma probability density function to the frequency distribution of \(\alpha_k\). Given the assumption that \(\alpha_k\) follows a lognormal distribution, \(RDI_{st}\) was calculated as follows:

$$RDI_{st}^{(i)}=\frac{y^{(i)}-\overline{y}}{\widehat{\sigma}_{y}}$$

(7)

where \(y^{(i)}\) is \(\ln\left(\alpha_{k}^{(i)}\right)\), \(\overline{y}\) is its arithmetic mean, and \(\widehat{\sigma}_{y}\) refers to its standard deviation. The \(RDI_n\) was calculated as follows:

$$RDI_{n}=\frac{\alpha_{k}^{(i)}}{\overline{\alpha_{k}}}-1$$

(8)

where \(\overline{\alpha_{k}}\) represents the average of the \(\alpha_k\) values for the n years.
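Eqs. (6) to (8) can be chained in a few lines, as in the sketch below, which uses the lognormal form of the standardized index. The sample standard deviation (`statistics.stdev`) is an assumption, since the source only refers to the standard deviation, and the function name `rdi_forms` and the data are hypothetical. Every year must have non-zero total precipitation for the logarithm to be defined.

```python
import math
import statistics

def rdi_forms(precip_by_year, pet_by_year):
    """Return (alpha_k, RDI_n, RDI_st) lists, one entry per hydrological year.

    Each inner list holds the k monthly values of one year.
    """
    # Eq. (6): ratio of cumulative precipitation to cumulative PET
    alpha = [sum(p) / sum(e) for p, e in zip(precip_by_year, pet_by_year)]
    # Eq. (8): normalized RDI relative to the multi-year mean of alpha_k
    alpha_mean = statistics.mean(alpha)
    rdi_n = [a / alpha_mean - 1.0 for a in alpha]
    # Eq. (7): standardized RDI on the log-transformed values
    y = [math.log(a) for a in alpha]
    y_mean = statistics.mean(y)
    y_sd = statistics.stdev(y)  # assumption: sample standard deviation
    rdi_st = [(v - y_mean) / y_sd for v in y]
    return alpha, rdi_n, rdi_st

# Hypothetical data: three years, two months each (mm)
precip = [[10.0, 20.0], [30.0, 30.0], [5.0, 10.0]]
pet = [[100.0, 100.0], [100.0, 100.0], [100.0, 50.0]]
alpha_k, rdi_n, rdi_st = rdi_forms(precip, pet)
```

In this example the second year has the highest precipitation-to-PET ratio and therefore the highest value in both the normalized and standardized forms.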

Soft computing models

Several AI models, namely decision tree (DT), generalized linear model (GLM), support vector machine (SVM), artificial neural network (ANN), deep learning (DL), and random forest (RF), were used in this study for drought assessment. Hyperparameter tuning was carried out in RapidMiner using grid search to find the optimal set of parameters for each model. The tuned parameters were 1) ANN: alpha, hidden layer sizes, max iteration, and number of iterations, and 2) RF: number of estimators, maximum depth, maximum features, minimum samples leaf, and minimum samples split.

Decision trees

DTs are efficient white-box classification and regression models that divide datasets into several subgroups. These models perform prediction by learning decision rules from the input parameters. The trees are branched through a recursive binary operation to minimize the sum of the squared deviations from the mean of the split parts. They are highly competent in managing data with different scales and nonlinearity. Furthermore, this model is capable of processing both discrete and continuous data types, incorporating an imputation technique in which missing values are substituted with their mean or median values. Additionally, the model is adept at identifying the most relevant factors for modeling without the need for extensive hyperparameter tuning. Consequently, a DT was used in the present research. More information can be found in [28].

Generalized linear model

A GLM is a supervised machine learning algorithm that encompasses linear and logistic regression, where the data are fitted using the maximum likelihood approach. A GLM develops a linear relationship between the response and the predictors even when the underlying interaction mechanism is nonlinear. This is achieved by applying a link function to relate the response to a linear model. In contrast to linear regression models, the error distribution of the response follows a distribution from the exponential family, e.g., a Poisson, binomial, or gamma distribution. Furthermore, GLMs are effective in dealing with models that have a limited number of predictors with nonzero parameters. A GLM can be represented mathematically through the following equation:

$$Y_{i}=\gamma_{0}+\gamma_{1}x_{i1}+\gamma_{2}x_{i2}+\dots+\gamma_{n}x_{in}$$

(9)

where \(Y_i\) is the predicted dependent variable, i.e., water consumption, i is the number of months, \(\gamma_0\) is a constant model parameter, and \(\gamma_1, \gamma_2 \dots \gamma_n\) and \(x_{i1}, x_{i2} \dots x_{in}\) are the regression coefficients and independent variables, respectively, for the ith observation having n features.

Support vector machine

An SVM is an AI-based model that originates from statistical learning theory. This method provides adequate generalization on a limited number of learning patterns by adopting the inductive structural risk minimization principle. The SVM uses a kernel function to obtain expert knowledge about the examined phenomenon and reduce the model complexity, hence increasing its forecasting accuracy [29]. The kernel function is considered a weighting function employed for nonparametric predictions. It can be modeled in linear, quadratic, and cubic forms. The mathematical kernel function of an SVM is given in the equation below:

$$k(x,y)={\left[1+(x,y)\right]}^{p}$$

(10)

where k is the kernel function, (x, y) denotes the inner product of the input vectors x and y, and p is a parameter assigned by the user to determine the order of the function.
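A minimal sketch of Eq. (10) is shown below, reading (x, y) as the inner product of two input vectors. The function name `polynomial_kernel` and the sample vectors are hypothetical.

```python
def polynomial_kernel(x, y, p):
    """Polynomial kernel of order p: (1 + <x, y>) ** p."""
    dot = sum(a * b for a, b in zip(x, y))
    return (1.0 + dot) ** p

# Hypothetical two-feature input vectors
u = [1.0, 2.0]
v = [3.0, 4.0]
linear = polynomial_kernel(u, v, 1)
quadratic = polynomial_kernel(u, v, 2)
```

Setting p to 1, 2, or 3 gives the linear, quadratic, and cubic forms mentioned above.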

Artificial neural network

An ANN is a computational method that mimics the functional behavior of a biological nerve cell, processing information by connecting the inputs and outputs in an organized manner [30]. The structure of a typical ANN consists of neurons (processing units), connection weights, biases, and multiple layers. Standard ANNs include several hidden layers, with neurons in each layer fully connected to every neuron in the next layer. This architecture allows for the assignment of distinct weights and biases that facilitate the inter-neuron connections. ANNs offer various advantages over other models because of their robustness in interpreting complex, nonlinear data with high degrees of fluctuation.

A multilayer perceptron (MLP) was used in the present study to predict drought. An MLP is a feed-forward ANN that maps a series of inputs onto a set of appropriate outputs. The input data are fed into the input layer and progress through the network, passing to all connected neurons in subsequent layers. An MLP employs backpropagation alongside the stochastic gradient descent optimization algorithm to train the network. During this process, the weights are adjusted iteratively until the network's predicted values closely align with the actual observed values. In this study, an MLP with 300, 150, and 150 neurons in the input, output, and middle layers, respectively, was implemented. The value of each neuron in the hidden layer is determined by the weighted sum of its inputs, as expressed in the following equation.

$$z_{j}=\sum_{i=0}^{n}w_{ij}x_{i}-b_{j}$$

(11)

where \(z_j\) is the weighted sum, n is the number of inputs, \(w_{ij}\) is the weight of the input \(x_i\), and \(b_j\) is the bias value for the jth neuron. The deployed model used a constant learning rate of 0.001 and a stochastic gradient-based optimizer to minimize the squared loss [31].
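Eq. (11) can be illustrated for a single layer with the short sketch below. It follows the sign convention of the equation, in which the bias is subtracted. The function name `weighted_sums` and all numerical values are hypothetical and unrelated to the trained network.

```python
def weighted_sums(inputs, weights, biases):
    """Pre-activation value z_j of each neuron j in one layer (Eq. 11).

    `weights[j]` holds the weights w_ij that neuron j applies to each input x_i.
    """
    return [
        sum(w_ij * x_i for w_ij, x_i in zip(neuron_weights, inputs)) - b_j
        for neuron_weights, b_j in zip(weights, biases)
    ]

# Hypothetical layer with two inputs and two neurons
x = [1.0, 2.0]
w = [[0.5, 0.25], [1.0, -1.0]]
b = [0.5, 0.0]
z = weighted_sums(x, w, b)
```

An activation function would then be applied to each \(z_j\) before the values are passed to the next layer.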

Deep learning

A DL model is considered a generalized form of a large neural network. It applies backpropagation to train a multilayer feedforward ANN through a stochastic gradient descent optimization algorithm. Its network can include several hidden layers composed of neurons with tanh, rectifier, and maxout activation functions. DL offers various advanced features, particularly momentum training, an adaptive learning rate, and several regularization techniques, which enhance the forecasting accuracy of this approach. Each computational node trains the global model parameters on its local data with multiple asynchronous threads. Moreover, the nodes contribute to the global model on a regular basis by performing model averaging across the network. More information on the development and application of DL models can be found in [32].

Random forest

An RF consists of a multitude of decision trees, each constructed by fitting random subsets of the dataset, and the collective output of these trees determines the final result. The method's inherent randomness in feature selection helps prevent the model from overfitting. RFs are often chosen for ensemble modeling because of their proficiency in managing nonlinear data. They are capable of handling large datasets with thousands of input variables and are versatile enough to address both regression and classification problems. However, RF models involve intricate computations and may not yield precise predictions when dealing with data containing extreme values. The construction of a tree within the forest involves the random selection of data samples and the assignment of a specific number of features and observations to train the forest, allowing the trees to grow to their maximum depth.

Drought indicators

Drought indicators are pivotal for the global assessment and management of drought conditions. The Australian Water Resources Assessment model, a joint initiative by the BoM and the Commonwealth Scientific and Industrial Research Organisation, has developed several drought indicators based on various climatic data [33]. Soil moisture data were used in this study to assess the effectiveness of drought indices, as they are used to detect drought events as early as possible [34]. The drought indicators used in this study were: (1) DI1: deep soil moisture, (2) DI2: lower soil moisture, (3) DI3: root zone soil moisture, (4) DI4: upper soil moisture, and (5) DI5: runoff, which provides a modeled estimate from a small, undisturbed watershed.

Evaluation criteria

The evaluation criteria adopted in this study were based on the Pearson correlation, the RMSE, and R². The Pearson correlation coefficient is a statistical tool that measures the linear relationship between two parameters [35]. It has been used in several applications, e.g., data analysis, noise reduction, and time-delay estimation. Pearson correlation was used to compare the results of the standard models with the drought indicators and to validate the outputs of the soft computing models.

$$PCC=\frac{n\sum x_{i}y_{i}-\sum x_{i}\sum y_{i}}{\sqrt{n\sum x_{i}^{2}-\left(\sum x_{i}\right)^{2}}\sqrt{n\sum y_{i}^{2}-\left(\sum y_{i}\right)^{2}}}$$

(12)

where PCC is the Pearson correlation coefficient, n is the number of data points, i is the data point index ranging from 1 to n, \(x_i\) is the ith value of variable x, and \(y_i\) is the ith value of variable y. In addition, the RMSE and R² were the criteria used to assess the performance of the models. The former is sensitive to prediction errors, particularly for peak data values, and serves as a measure of the models' accuracy, whereas the latter indicates the degree of agreement between the predicted and observed values [36].
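The three criteria can be sketched as follows. The Pearson function follows Eq. (12) term by term. The source gives no formulas for the RMSE or R², so the usual definitions (root of the mean squared error, and one minus the ratio of residual to total sum of squares) are assumptions here, as are the function names and the sample values.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient, written term by term as in Eq. (12)."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    sum_xx = sum(a * a for a in x)
    sum_yy = sum(b * b for b in y)
    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt(n * sum_xx - sum_x ** 2) * math.sqrt(n * sum_yy - sum_y ** 2)
    return numerator / denominator

def rmse(observed, predicted):
    """Root mean square error (assumed standard definition)."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))

def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot

# Hypothetical observed and predicted index values
obs = [1.0, 2.0, 3.0, 4.0]
pred = [2.0, 4.0, 6.0, 8.0]
pcc_value = pearson(obs, pred)
rmse_value = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```

The example shows why the criteria are reported together: the two series are perfectly correlated even though the predictions are twice the observed values, a bias that only the error-based measures would reveal.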