Magnetic resonance processing with artificial intelligence (MR-Ai)

MR-Ai architecture for 2D patterns: Echo (or Anti-Echo) reconstruction

In our earlier work, we demonstrated the effectiveness of the WaveNet-based NMR Network (WNN) in capturing common 1D patterns in 2D NMR spectra, including point spread function (PSF) patterns in non-uniformly sampled (NUS) spectra and peak multiplets resulting from scalar coupling9. For Echo (or Anti-Echo) reconstruction, where the phase-twist lineshape manifests as a 2D pattern, we designed a 2D WNN architecture to accommodate this feature. Within the MR-Ai framework for Echo (or Anti-Echo) reconstruction, the overall network architecture comprises two main components: WNNs and correction steps.

The MR-Ai architecture for Echo (or Anti-Echo) reconstruction consists of five distinct WNNs (Fig. 4a). Although a single network produces a relatively good spectrum, better quantitative reconstructions typically require refinement over several iterations6,9. The WNNs are trained separately in sequential order, each using the output of the pre-trained upstream network as its input. Before training, the inputs and outputs of every WNN are normalized by the Euclidean norm of the input. The initial spectrum $\mathbf{S}_{\mathrm{Echo}} \in \mathbb{R}^{2k\times 2l}$, which contains a strong phase-twist lineshape, is given as input to the first WNN. Each consecutive WNN diminishes the inherent artifacts and generates the spectrum $\widetilde{\mathbf{S}}^{i} \in \mathbb{R}^{2k\times 2l}$, which progressively aligns more closely with the correct spectrum. As input to each subsequent WNN$_i$ (i = 1, 2, 3, 4), we computed the corrected spectrum $\mathbf{S}_{\mathrm{Cor}}^{i} \in \mathbb{R}^{2k\times 2l}$:

$$\mathbf{S}_{\mathrm{Cor}}^{i} = \mathbf{S}_{\mathrm{Echo}} + C(i)\cdot\widetilde{\mathbf{S}}_{\text{Anti-Echo}}^{i}, \qquad \widetilde{\mathbf{S}}_{\text{Anti-Echo}}^{i} = \mathrm{FT}\left[Z_{E}\left[\mathrm{iFT}\left[\widetilde{\mathbf{S}}^{i}\right]\right]\right] \tag{2}$$

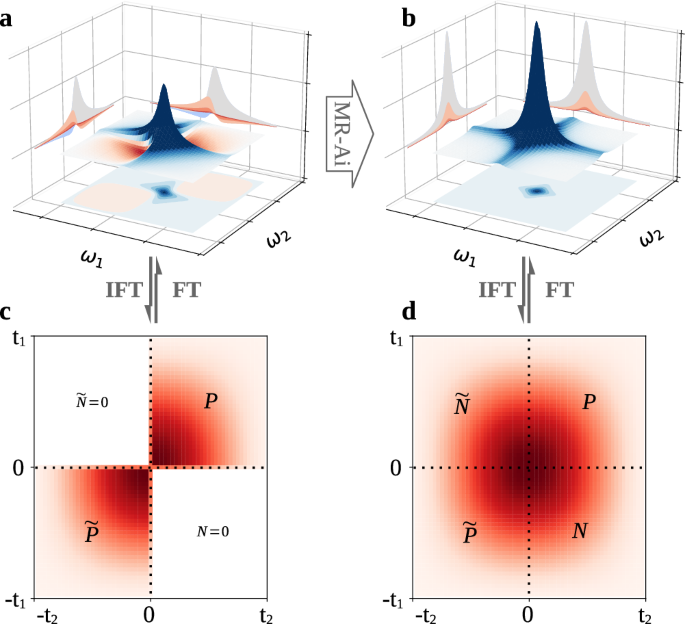

where $\widetilde{\mathbf{S}}_{\text{Anti-Echo}}^{i}$ represents the Anti-Echo part of the predicted spectrum $\widetilde{\mathbf{S}}^{i}$, produced by zeroing ($Z_E$) the Echo part in its time-domain VE representation28 (i.e., zeroing P and $\widetilde{P}$ in Fig. 1d). Note that the VE is the 2D complex ($\mathbb{C}^{2k\times 2l}$) time-domain signal obtained by the inverse 2D Fourier transform of the real part ($\mathbb{R}^{2k\times 2l}$) of the spectrum. At step i, $C(i) = 1 - 0.05 \times 2^{1-i}$ is a real, step-dependent scalar factor. In other words, the correction exploits the clean separation of the Echo and Anti-Echo parts in the VE time-domain representation of the spectrum: it slightly attenuates the predicted Anti-Echo part $\widetilde{\mathbf{S}}_{\text{Anti-Echo}}^{i}$ while restoring the Echo part to the input values $\mathbf{S}_{\mathrm{Echo}}$. The correction is not applied after the final step. We found that the correction significantly increases the quality of the reconstructed spectrum.
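The correction of Eq. (2) and the five-stage cascade can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code (which is given in Supplementary Methods 1.1): the trained WNNs are replaced by arbitrary callables, the inference-time normalization by the input's Euclidean (Frobenius) norm is assumed, the pairing of correction step i with the i-th network output is assumed, and the positions of the P and $\widetilde{P}$ regions in the VE array are assumed from the usual FFT index order (non-negative times in the first half of each axis), which may differ from the layout drawn in Fig. 1c,d.

```python
import numpy as np


def correction_factor(i):
    """Step-dependent factor C(i) = 1 - 0.05 * 2**(1 - i) from Eq. (2)."""
    return 1.0 - 0.05 * 2.0 ** (1 - i)


def echo_mask(shape):
    """Boolean mask of the (assumed) Echo regions P and P~ in a 2k x 2l VE array.

    Assumption: FFT index order, i.e. indices [0, k) / [0, l) hold t >= 0
    and the second half of each axis holds t < 0.
    """
    k, l = shape[0] // 2, shape[1] // 2
    mask = np.zeros(shape, dtype=bool)
    mask[:k, :l] = True   # P:  t1 >= 0, t2 >= 0
    mask[k:, l:] = True   # P~: mirrored complex-conjugate copy of P
    return mask


def anti_echo_part(spectrum):
    """FT[Z_E[iFT[S]]]: keep only the Anti-Echo part of a real spectrum."""
    ve = np.fft.ifft2(spectrum)          # Virtual Echo representation
    ve[echo_mask(ve.shape)] = 0.0        # Z_E: zero the Echo regions
    return np.fft.fft2(ve).real          # back to a real 2k x 2l spectrum


def corrected_input(s_echo, s_pred, i):
    """S_Cor^i = S_Echo + C(i) * (Anti-Echo part of the prediction)."""
    return s_echo + correction_factor(i) * anti_echo_part(s_pred)


def mr_ai_echo_cascade(s_echo, networks):
    """Run hypothetical network callables in sequence with Eq. (2) between stages."""
    x = s_echo
    for step, net in enumerate(networks, start=1):
        scale = np.linalg.norm(x)        # Euclidean norm of the current input
        s_pred = net(x / scale) * scale  # normalize in, rescale out (assumed)
        if step == len(networks):
            return s_pred                # no correction after the final network
        x = corrected_input(s_echo, s_pred, step)
    return x
```

With this schedule, C(1) = 0.95, C(2) = 0.975, C(3) = 0.9875 and C(4) = 0.99375, so the attenuation 1 − C(i) applied to the predicted Anti-Echo part halves at every step.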

The WNN architecture employed in MR-Ai was inspired by WaveNet, which was originally designed for analyzing long audio signals in the time domain40. Like WaveNet, the WNN uses dilated convolutional layers, which skip a specified number of data points and can thus be viewed as convolutional layers with gaps. By assigning different dilation rates to different convolutional layers, it is possible to build a block that behaves like a single convolutional layer with a very large kernel. In this architecture, the first layer applies a 2 × 4 kernel with 50 filters and no dilation, whereas each subsequent layer, such as the mth layer, uses a 2 × 2 kernel with 50 filters and a $(2^{m-1}, 2^{m})$ dilation rate. The WNN consists of five layers with Rectified Linear Unit (ReLU) activation functions41 and no padding between the layers (Fig. 4b). In this configuration, the output layer has dimensions of 32 × 64, which, owing to the chosen layers and the absence of padding, corresponds to an input layer of 63 × 127. We built the network graphs with the TensorFlow Python package42 and its Keras front end. Training was performed in TensorFlow using the stochastic Adam optimizer43 with default parameters and a learning rate of 0.0001, a mean squared error loss function, a mini-batch size of 64, and at most 1000 epochs, stopping earlier when a monitored metric stopped improving on the validation data set (see Supplementary Methods 1.1 for Python code).
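The 63 × 127 → 32 × 64 mapping follows from valid-convolution arithmetic: an unpadded layer with kernel size K and dilation d shrinks an axis by d(K − 1). The pure-Python shape check below assumes only that the dilated layers are indexed m = 2, …, 5 after the undilated first layer; it reproduces the sizes quoted above but is not the TensorFlow model itself.

```python
def conv_out_len(n, kernel, dilation):
    """Length of an axis after an unpadded ("valid") dilated convolution."""
    return n - dilation * (kernel - 1)


def wnn_output_shape(in_shape, n_layers=5):
    """Output shape of the WNN stack described in the text (shape arithmetic only).

    Layer 1: 2 x 4 kernel, no dilation.
    Layer m (m = 2..n_layers): 2 x 2 kernel, dilation (2**(m-1), 2**m).
    """
    h, w = in_shape
    h, w = conv_out_len(h, 2, 1), conv_out_len(w, 4, 1)
    for m in range(2, n_layers + 1):
        h = conv_out_len(h, 2, 2 ** (m - 1))
        w = conv_out_len(w, 2, 2 ** m)
    return h, w


def wnn_receptive_field(n_layers=5):
    """Size of the input patch that influences a single output point."""
    rh = 1 + (2 - 1) + sum(2 ** (m - 1) for m in range(2, n_layers + 1))
    rw = 1 + (4 - 1) + sum(2 ** m for m in range(2, n_layers + 1))
    return rh, rw


print(wnn_output_shape((63, 127)))   # (32, 64)
print(wnn_receptive_field())         # (32, 64)
```

In Keras terms, each layer would presumably be a `Conv2D(50, kernel_size, dilation_rate=..., padding="valid", activation="relu")` call; `padding="valid"` is what produces the shrinkage computed here.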

We trained the WNNs on the NMRbox server44 (128 cores, 2 TB of memory), equipped with four NVIDIA A100 Tensor Core GPUs. The final training and cross-validation losses for all five WNNs used for Echo reconstruction are shown in Fig. 4c.

Uncertainty estimation for predictions using MR-Ai

In this study, we used MR-Ai to predict the uncertainty of the intensity at every point of a spectrum produced by a given reconstruction method. Several methods for estimating uncertainty with DNNs are available34. Owing to the lightweight nature of the WNN, its small number of trainable parameters, and its fast training, it was possible to adopt a Gaussian mixture model-based approach for uncertainty estimation36. We achieved this by adding a Gaussian distribution layer from TensorFlow Probability (TFP Probabilistic Layers) to the WNN and using the negative log-likelihood (NLL) as the loss function (Eq. (1)). The WNN uses the reconstructed spectrum intensities as the fixed means (μ) of the Gaussian distributions and determines σ, the corresponding uncertainty, from the input data (Fig. 3). To ensure that the estimated uncertainty σ is always positive and non-zero, we applied a ReLU activation function before the Gaussian distribution layer, with standard regularization factors in TensorFlow (see Supplementary Methods 1.2 for Python code). Here, we trained three MR-Ai models for uncertainty estimation, namely for MR-Ai Echo (or Anti-Echo), CS Echo (or Anti-Echo), and CS NUS. The final training and cross-validation losses for these networks are shown in Fig. 5.

Synthetic data for training

For training the DNNs, we used synthetic data. The 2D NMR hypercomplex time-domain signal $\mathbf{X}_{\mathrm{FID}} \in \mathbb{H}^{k\times l}$, referred to as the free induction decay (FID), is represented as a combination of the P- and N-type complex signals $\mathbf{X}_{\mathrm{P}} \in \mathbb{C}^{k\times l}$ and $\mathbf{X}_{\mathrm{N}} \in \mathbb{C}^{k\times l}$, respectively:

$$\mathbf{X}_{\mathrm{P/N}}(t_1,t_2) = \sum_{n} A_n \left(e^{\pm\mathbf{i}(2\pi\omega_{1_n}t_1+\phi_{1_n})}\, e^{-t_1/\tau_{1_n}}\right)\left(e^{\mathbf{i}(2\pi\omega_{2_n}t_2+\phi_{2_n})}\, e^{-t_2/\tau_{2_n}}\right) + \mathbf{noise} \tag{3}$$

where the sum runs over N exponentials, and the nth exponential has amplitude $A_n$, phases $\phi_{1_n}$ and $\phi_{2_n}$, relaxation times $\tau_{1_n}$ and $\tau_{2_n}$, and frequencies $\omega_{1_n}$ and $\omega_{2_n}$ in the indirect and direct dimensions, respectively. The evolution times $t_1$ and $t_2$ are given by the series indexed 0, 1, …, T − 1, where T = k or l is the number of complex points in the respective dimension. The $\mathbf{X}_{\mathrm{P}}$ and $\mathbf{X}_{\mathrm{N}}$ parts are defined by the + and − signs, respectively, before the imaginary unit in the first exponent. The desired number of different FIDs for the training set is simulated by randomly varying the above parameters within the ranges summarized in Table 1. These parameters are representative of 2D ¹H-¹⁵N correlation spectra. However, the DNN would require re-training for spectra whose signal features lie outside the ranges in the table, for example, larger phase distortions or a wider spread of peak intensities, as may be observed in NOESY spectra or metabolomics data. A very high dynamic range of signal intensities may even require changes to the DNN architecture.
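Eq. (3) can be sampled directly with NumPy. The sketch below is illustrative only: all parameter ranges are hypothetical placeholders (the actual ranges are in Table 1, which is not reproduced here), frequencies are expressed in cycles per complex point, relaxation times in points, and the noise term is modeled as independent complex Gaussian noise added to each part.

```python
import numpy as np


def simulate_pn(k, l, n_peaks, rng, noise_std=0.0):
    """Simulate P- and N-type complex FIDs (k x l) following Eq. (3).

    All parameter ranges below are hypothetical placeholders, not Table 1.
    Frequencies are in cycles/point, relaxation times in points.
    """
    t1 = np.arange(k)[:, None]                    # indirect-dimension index 0..k-1
    t2 = np.arange(l)[None, :]                    # direct-dimension index 0..l-1
    x_p = np.zeros((k, l), dtype=complex)
    x_n = np.zeros((k, l), dtype=complex)
    for _ in range(n_peaks):
        amp = rng.uniform(0.1, 1.0)
        w1, w2 = rng.uniform(-0.5, 0.5, size=2)
        phi1, phi2 = rng.normal(0.0, 0.05, size=2)
        tau1, tau2 = rng.uniform(0.2 * k, k), rng.uniform(0.2 * l, l)
        direct = np.exp(1j * (2 * np.pi * w2 * t2 + phi2)) * np.exp(-t2 / tau2)
        decay1 = np.exp(-t1 / tau1)
        arg1 = 2 * np.pi * w1 * t1 + phi1
        x_p += amp * np.exp(+1j * arg1) * decay1 * direct   # '+' sign: P-type
        x_n += amp * np.exp(-1j * arg1) * decay1 * direct   # '-' sign: N-type
    if noise_std > 0:
        for x in (x_p, x_n):                                # in-place noise addition
            x += noise_std * (rng.normal(size=(k, l)) + 1j * rng.normal(size=(k, l)))
    return x_p, x_n
```

Without noise and with a single peak, the P- and N-type parts differ only in the sign of the indirect-dimension phase, so their magnitudes agree point by point.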

We added Gaussian noise to emulate the noise present in realistic NMR spectra. Subsequently, standard 2D signal processing with the Python package nmrglue45, including apodization, zero-filling, FT, and phase correction, is used to obtain pure absorption-mode spectra S (Fig. 1b) from the hypercomplex $\mathbf{X}_{\mathrm{FID}}$.
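The path from P/N-type data to a pure absorption spectrum can be sketched with plain NumPy FFTs instead of nmrglue. The sketch assumes zero phase errors (so phase correction is omitted), uses a cosine-bell window and twofold zero-filling as placeholder processing choices, and forms the cosine- and sine-modulated (States-type) components as $(\mathbf{X}_{\mathrm{P}}+\mathbf{X}_{\mathrm{N}})/2$ and $(\mathbf{X}_{\mathrm{P}}-\mathbf{X}_{\mathrm{N}})/2\mathbf{i}$. It is not the nmrglue pipeline used in the study.

```python
import numpy as np


def cosine_bell(n):
    """Cosine-bell apodization window: 1 at t = 0, decaying toward 0 at t = n."""
    return np.cos(0.5 * np.pi * np.arange(n) / n)


def pn_to_absorption(x_p, x_n, zf=2):
    """Convert P/N-type data (k x l) to a real absorption-mode spectrum (zf*k x zf*l).

    Assumes no phase errors, so no phase-correction step is applied.
    """
    k, l = x_p.shape
    x_cos = (x_p + x_n) / 2.0           # cos(w1 t1)-modulated component
    x_sin = (x_p - x_n) / 2.0j          # sin(w1 t1)-modulated component

    def direct_ft(x):
        x = x * cosine_bell(l)[None, :]                 # apodize along t2
        x = np.fft.fft(x, n=zf * l, axis=1)             # zero-fill + FT along t2
        return np.fft.fftshift(x, axes=1).real          # keep the absorptive part

    interferogram = direct_ft(x_cos) + 1j * direct_ft(x_sin)  # complex along t1
    interferogram = interferogram * cosine_bell(k)[:, None]   # apodize along t1
    spec = np.fft.fft(interferogram, n=zf * k, axis=0)        # zero-fill + FT along t1
    return np.fft.fftshift(spec, axes=0).real
```

After `fftshift`, a frequency f (cycles/point) lands at index N/2 + fN of an axis of length N, so a peak at f1 = 0.125 and f2 = −0.25 on a 32 × 64 input appears at index (40, 32) of the 64 × 128 spectrum.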

The Virtual Echo28 (VE) representation, a straightforward way to generate Echo (or Anti-Echo) spectra with a phase-twist lineshape, is obtained by the inverse 2D Fourier transform (IFT) of the real part of the spectrum S (Fig. 1d). The VE representation is essentially a combination of P- and N-type complex time-domain data. The Echo spectrum $\mathbf{S}_{\mathrm{Echo}}$ (Fig. 1a) is obtained by zeroing the N-type part of the data (i.e., the N and $\widetilde{N}$ regions in Fig. 1c), followed by a 2D FT. Similarly, the Anti-Echo spectrum $\mathbf{S}_{\text{Anti-Echo}}$ is obtained by zeroing the P-type data. In this work, we synthesized 1024 [$\mathbf{S}_{\mathrm{Echo}}$, S] datasets for training MR-Ai Echo (or Anti-Echo) reconstruction, based on Table 1.
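The VE route from an absorption spectrum S to the Echo and Anti-Echo spectra can be sketched with NumPy FFTs. The positions of the P, N, $\widetilde{P}$ and $\widetilde{N}$ regions are an assumption based on FFT index order (non-negative times in the first half of each axis), not a transcription of Fig. 1c, and taking the real part of the back-transformed data is likewise assumed. Because the two masks are complementary, the Echo and Anti-Echo spectra sum back to S, which gives a simple sanity check.

```python
import numpy as np


def ve_quadrant_masks(shape):
    """Return (echo_mask, anti_echo_mask) for a 2k x 2l VE array (assumed layout)."""
    k, l = shape[0] // 2, shape[1] // 2
    echo = np.zeros(shape, dtype=bool)
    echo[:k, :l] = True      # P:  t1 >= 0, t2 >= 0
    echo[k:, l:] = True      # P~: mirrored complex-conjugate copy of P
    return echo, ~echo       # the complement holds N (t1 < 0, t2 >= 0) and N~


def split_echo_antiecho(spectrum):
    """Split a real 2k x 2l spectrum S into Echo and Anti-Echo spectra via the VE."""
    ve = np.fft.ifft2(spectrum)                                # 2D complex time domain
    echo_mask, anti_mask = ve_quadrant_masks(ve.shape)
    s_echo = np.fft.fft2(np.where(echo_mask, ve, 0)).real      # zero N-type, then 2D FT
    s_anti = np.fft.fft2(np.where(anti_mask, ve, 0)).real      # zero P-type, then 2D FT
    return s_echo, s_anti
```

A training pair [S_Echo, S] would then be `(split_echo_antiecho(s)[0], s)` for each simulated absorption spectrum `s`.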

To train MR-Ai to predict uncertainties in spectra reconstructed by various methods, the training dataset includes the input data, the reconstruction result obtained with the method, and the reference ground-truth spectra S. In a production run, the trained MR-Ai can estimate the uncertainty of the reconstruction generated by the method using only the input data, e.g., the twisted Echo spectrum or an NUS spectrum with aliasing artifacts (Table 2, Fig. 3). Here, we used three training datasets based on Table 3 to train three MR-Ai models for uncertainty estimation, for MR-Ai Echo (or Anti-Echo), CS Echo (or Anti-Echo), and CS NUS.

For training, multiple synthetic NUS spectra $\mathbf{S}_{\mathrm{NUS}}$ are generated from the uniformly sampled spectra S using the same 50% Poisson-gap sampling schedule46.
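A 50% Poisson-gap schedule and its application to uniformly sampled data can be sketched as follows. The generator is our Python adaptation of the sine-weighted Poisson-gap idea of ref. 46 (gap sizes drawn from a Poisson distribution whose mean grows along the indirect dimension, with the mean rescaled until the target count is reached); its constants, the seed handling, and the helper names are assumptions, not the schedule actually used in the study.

```python
import numpy as np


def poisson_gap_schedule(n, fraction=0.5, seed=0, max_iter=100_000):
    """Return sorted indices of sampled increments (out of n) for a Poisson-gap schedule."""
    rng = np.random.default_rng(seed)
    target = int(round(n * fraction))
    adj = 2.0 * (n / target - 1.0)          # initial guess for the gap-scale factor
    for _ in range(max_iter):
        picked, i = [], 0
        while i < n:
            picked.append(i)
            i += 1
            # sine weighting: small gaps early in t1, larger gaps later
            i += rng.poisson(adj * np.sin((i + 0.5) / (n + 1) * np.pi / 2))
        if len(picked) == target:
            return np.array(picked)
        adj = adj * 1.02 if len(picked) > target else adj / 1.02
    raise RuntimeError("could not reach the target number of samples")


def apply_nus(time_domain, schedule):
    """Zero all unsampled increments along the indirect (first) axis."""
    mask = np.zeros(time_domain.shape[0], dtype=bool)
    mask[schedule] = True
    return np.where(mask[:, None], time_domain, 0)
```

Reusing one fixed seed reproduces the same schedule, mirroring the use of a single 50% schedule for all synthetic NUS spectra.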

Use of experimental data for MR-Ai testing

To test the performance of CS and the trained MR-Ai, we used previously described 2D spectra of several proteins: U-¹⁵N-¹³C-labeled ubiquitin (8.6 kDa)47, U-¹⁵N-¹³C-labeled Cu(I) azurin (14 kDa)26, U-¹⁵N-¹³C-²H methyl-ILV back-protonated MALT1 (44 kDa)48, and U-¹⁵N-¹³C-²H Tau (46 kDa, IDP)27. The fully sampled two-dimensional experiments used in this study are described in Table 2. We used NMRPipe49, mddnmr50, and the Python package nmrglue45 for reading, writing, and conventional processing of the NMR spectra.

Processing with compressed sensing

We applied the Compressed Sensing Iterative Soft Thresholding algorithm (CS-IST) combined with VE, similar to recent versions of the CS module in the mddnmr software28,29. The same algorithm was used for NUS reconstruction of spectra acquired with a fixed 50% Poisson-gap random sampling schedule.
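The core of IST reconstruction can be illustrated on a single 1D NUS trace. The NumPy sketch below uses the textbook iteration $x \leftarrow \mathrm{soft}_\lambda\left(x + F\left(y - M F^{-1}x\right)\right)$ with a unitary FFT $F$, a sampling mask $M$, and a geometrically decreasing threshold $\lambda$; the threshold schedule and iteration count are placeholder choices. It does not include the VE coupling or any other detail of the mddnmr implementation.

```python
import numpy as np


def soft_threshold(z, lam):
    """Complex soft thresholding: shrink magnitudes by lam and keep the phases."""
    mag = np.abs(z)
    return z * (np.maximum(mag - lam, 0.0) / np.maximum(mag, 1e-300))


def ist_reconstruct(y, mask, n_iter=300, final_ratio=1e-4):
    """Recover a sparse spectrum x from NUS time-domain data y = mask * IFT(x).

    y:    time-domain data with zeros at unsampled points
    mask: 1 for sampled points, 0 otherwise
    """
    y = y * mask
    x = np.zeros(y.shape, dtype=complex)
    lam0 = 0.95 * np.abs(np.fft.fft(y, norm="ortho")).max()  # just below the tallest peak
    for it in range(n_iter):
        lam = lam0 * final_ratio ** (it / max(n_iter - 1, 1))  # geometric threshold decay
        residual = y - mask * np.fft.ifft(x, norm="ortho")      # mismatch at sampled points
        x = soft_threshold(x + np.fft.fft(residual, norm="ortho"), lam)
    return x
```

With the unitary (`norm="ortho"`) transforms, a step size of 1 is stable because the masked inverse FFT never increases the signal norm.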

Quality metrics for the reconstructed spectra

We assessed the quality of the experimental spectra obtained with three reconstruction methods: MR-Ai for Echo (Anti-Echo) data, CS for Echo (Anti-Echo) data, and CS for time-equivalent 50% NUS data.

Classic reference-based evaluation metrics

To evaluate the similarity between the reconstructed and the fully sampled reference spectra, we used two classic reference-based evaluation metrics: the root-mean-square deviation (RMSD) and the correlation coefficient ($R_S^2$) between the reconstruction and reference spectra. First, all spectra were normalized by their maximal peak intensity. The RMSD and $R_S^2$ were calculated only for spectral points with intensities above 1% of the highest peak intensity in either the reconstruction or the reference spectrum, to limit the potential effects of baseline noise on the quality metrics. This approach ensures that both metrics are sensitive to false-positive and false-negative spectral artifacts, while points with very low intensities near or below the noise level are ignored. It has been demonstrated that the results of the RMSD and $R_S^2$ metrics closely correspond to those obtained with the NUScon metrics9,31. Supplementary Fig. 9 shows the comparison between different reconstruction methods on four experimental spectra of different proteins with varying complexity.
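The two reference-based metrics can be sketched in NumPy as below. Two details are our assumptions: normalization and the 1% threshold are applied to absolute intensities, and $R_S^2$ is taken to be the squared Pearson correlation over the selected points.

```python
import numpy as np


def reference_metrics(recon, ref, rel_threshold=0.01):
    """RMSD and squared Pearson correlation between a reconstruction and a reference."""
    recon = recon / np.max(np.abs(recon))     # normalize to maximal peak intensity
    ref = ref / np.max(np.abs(ref))
    # keep points above 1% of the highest peak in either spectrum
    mask = (np.abs(recon) > rel_threshold) | (np.abs(ref) > rel_threshold)
    a, b = recon[mask], ref[mask]
    rmsd = np.sqrt(np.mean((a - b) ** 2))
    r2 = np.corrcoef(a, b)[0, 1] ** 2
    return rmsd, r2
```

Because both spectra are normalized first, a reconstruction that differs from the reference only by a global scale factor scores RMSD = 0 and $R_S^2$ = 1.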

Predicted reference-free spectrum quality score (pSQ)

The pSQ relies on the predicted uncertainties for each point of the spectrum. First, all predicted uncertainties were normalized by the maximal peak intensity in the reconstructed spectrum. By evaluating the predicted uncertainties only for spectral points with intensities above 1% of the highest peak intensity in the reconstruction, we can compare reconstruction performance across different methods (Supplementary Fig. 10).
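The pSQ summary can be sketched as follows. Because the box plots in Fig. 6c report the median and the mean of the normalized uncertainty distribution, the sketch returns both; collapsing them into one scalar score, and applying the 1% threshold to absolute intensities, are left as assumptions. Lower values indicate smaller predicted uncertainty, i.e., a better reconstruction.

```python
import numpy as np


def psq_summary(recon, sigma, rel_threshold=0.01):
    """Median and mean of normalized predicted uncertainties over significant points.

    recon: reconstructed spectrum
    sigma: predicted per-point uncertainties (same shape as recon)
    """
    peak = np.max(np.abs(recon))                 # maximal peak intensity
    mask = np.abs(recon) / peak > rel_threshold  # points above 1% of the highest peak
    u = sigma[mask] / peak                       # uncertainties normalized to that peak
    return float(np.median(u)), float(np.mean(u))
```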

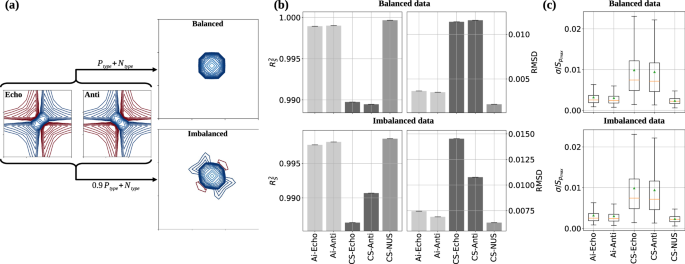

Balanced and imbalanced P- and N-type synthetic test data

The synthetic test data are used to simulate two distinct scenarios (Fig. 6). In the first case, the P- and N-type data parts were amplitude-balanced and consequently produced an ideal peak lineshape. In the second scenario, an amplitude imbalance between the P- and N-type data (here, the P-type part is 10% smaller than the N-type part) resulted in an imperfect peak in the conventionally processed reference spectrum, with a slight but visible residual phase-twisted lineshape. This lineshape imperfection distorts the reference-based metrics by favoring the reconstruction of the corresponding Echo (or Anti-Echo) spectrum, regardless of whether MR-Ai or CS is used (Fig. 6b). The pSQ metric (Fig. 6c) does not use the distorted reference spectrum and thus shows the expected equal quality for the Echo and Anti-Echo spectra reconstructed by MR-Ai and CS.
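The size of the residual twist can be estimated with a short back-of-the-envelope decomposition (our illustration, not taken from the study). Because conventional processing is linear, the imbalanced data split into a scaled balanced part plus a pure N-type part, where $\mathbf{S}_{\mathrm{N}}$ denotes the spectrum obtained by processing the N-type data alone:

$$0.9\,\mathbf{X}_{\mathrm{P}} + \mathbf{X}_{\mathrm{N}} = 0.9\,(\mathbf{X}_{\mathrm{P}} + \mathbf{X}_{\mathrm{N}}) + 0.1\,\mathbf{X}_{\mathrm{N}} \quad\Rightarrow\quad \mathbf{S}^{\mathrm{imb}} = 0.9\,\mathbf{S} + 0.1\,\mathbf{S}_{\mathrm{N}}$$

Assuming, for a single peak with absorptive ($A_1$, $A_2$) and dispersive ($D_1$, $D_2$) lineshape components, that $\mathbf{S} = A_1A_2$ and $\mathbf{S}_{\mathrm{N}} = \tfrac{1}{2}(A_1A_2 \pm D_1D_2)$, with the sign set by the processing convention:

$$\mathbf{S}^{\mathrm{imb}} = 0.95\,A_1A_2 \pm 0.05\,D_1D_2$$

so the reference peak carries a phase-twist component of roughly 0.05/0.95 ≈ 5% of its absorptive amplitude.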

a Effects of balanced and imbalanced P- and N-type data on the results of conventional phase-modulated data processing. MR-Ai and CS reconstruction performance for balanced and imbalanced P- and N-type synthetic data, assessed with (b) classic reference-based evaluation metrics and (c) the predicted reference-free quality metric, shown as a box plot of normalized spectral uncertainties. The orange bar and the green triangle indicate the median and the mean of the uncertainty distribution, respectively.