Around the world, governments are grappling with how best to manage the increasingly unruly beast that is artificial intelligence (AI).

This fast-growing technology promises to boost national economies and make menial tasks easier to complete. But it also poses serious risks, such as AI-enabled crime and fraud, the increased spread of misinformation and disinformation, increased public surveillance and further discrimination against already disadvantaged groups.

The European Union has taken a world-leading role in addressing these risks. In recent weeks, its Artificial Intelligence Act came into force.

This is the first law globally designed to comprehensively manage AI risks – and Australia and other countries can learn a lot from it as they too try to ensure AI is safe and beneficial for everyone.

AI: a double-edged sword

AI is already widespread in human society. It is the basis of the algorithms that recommend music, films and television shows on applications such as Spotify or Netflix. It is in cameras that identify people in airports and shopping malls. And it is increasingly used in hiring, education and healthcare services.

But AI is also being used for more troubling purposes. It can create deepfake images and videos, facilitate online scams, fuel mass surveillance and violate our privacy and human rights.

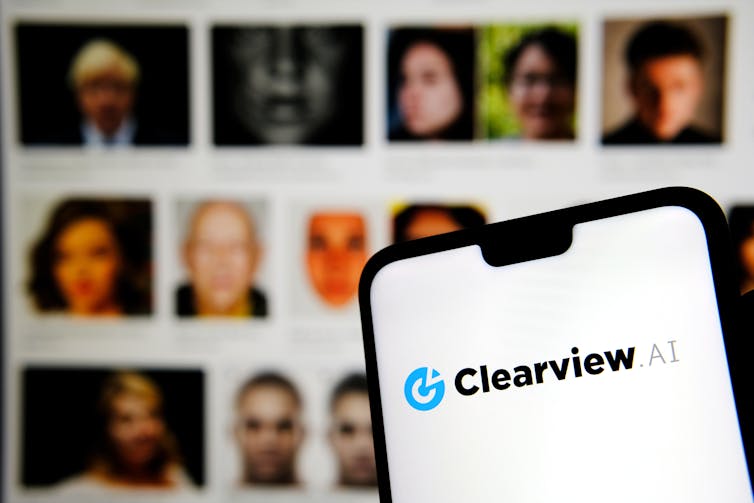

For example, in November 2021 the Australian Information and Privacy Commissioner, Angelene Falk, ruled that a facial recognition tool, Clearview AI, breached privacy laws by scraping people's photographs from social media sites for training purposes. However, a Crikey investigation earlier this year found the company is still collecting photos of Australians for its AI database.

Cases such as this underscore the urgent need for better regulation of AI technologies. Indeed, AI developers have even called for laws to help manage AI risks.


The EU Artificial Intelligence Act

The European Union’s new AI law came into force on August 1.

Crucially, it sets requirements for different AI systems based on the level of risk they pose. The more risk an AI system poses to people's health, safety or human rights, the stronger the requirements it has to meet.

The act contains a list of prohibited high-risk systems. This list includes AI systems that use subliminal techniques to manipulate individual decisions. It also includes unrestricted, real-time facial recognition systems used by law enforcement authorities, similar to those currently used in China.

Other AI systems, such as those used by government authorities or in education and healthcare, are also considered high risk. Although these aren’t prohibited, they must comply with many requirements.

For example, these systems must have their own risk management plan, be trained on quality data, meet accuracy, robustness and cybersecurity requirements and ensure a certain level of human oversight.

Lower-risk AI systems, such as various chatbots, need to comply with only certain transparency requirements. For example, individuals must be told they are interacting with an AI bot and not an actual person. AI-generated images and text also need to carry an indication that they were generated by AI, and not by a human.

Designated EU and national authorities will monitor whether AI systems used in the EU market comply with these requirements and will issue fines for non-compliance.

Other countries are following suit

The EU is not alone in taking action to tame the AI revolution.

Earlier this year the Council of Europe, an international human rights organisation with 46 member states, adopted the first international treaty requiring AI to respect human rights, democracy and the rule of law.

Canada is also discussing the AI and Data Bill. Like the EU law, it will set rules for various AI systems depending on their risks.

Instead of a single law, the US government recently proposed a number of different laws addressing different AI systems in various sectors.

Australia can learn – and lead

In Australia, people are deeply concerned about AI, and steps are being taken to put mandatory guardrails on the new technology.

Last year, the federal government ran a public consultation on safe and responsible AI in Australia. It then established an AI expert group, which is currently working on the first proposed legislation on AI.

The government also plans to reform laws to address AI challenges in healthcare, consumer protection and the creative industries.

The risk-based approach to AI regulation, used by the EU and other countries, is a good start when thinking about how to regulate diverse AI technologies.

However, a single law on AI will never be able to address the complexities of the technology in specific industries. For example, AI use in healthcare will raise complex ethical and legal issues that will need to be addressed in specialised healthcare laws. A generic AI Act will not suffice.

Regulating diverse AI applications across sectors is not an easy task, and there is still a long way to go before all countries have comprehensive and enforceable laws in place. Policymakers must join forces with industry and communities around Australia to ensure AI brings the promised benefits to Australian society – without the harms.