AI technologies, particularly computer vision (CV), are increasingly used in minimally invasive surgery, with previous applications focused on identifying surgical instruments, phases, and anatomical structures to minimize risks and enhance skill assessment23. However, limited research has focused on intraoperative diagnosis and treatment decision-making, particularly for GC patients with IAM30,31. Accurate LE is as important as safe surgical resection for the treatment and prognosis of GC patients. Yet, even with surgeon experience, IAM lesions may still be misjudged or omitted in this phase. This study is the first to apply a real-time semantic segmentation algorithm during the LE phase of GC surgery, achieving satisfactory recognition and segmentation of IAM lesions with a Dice score of 0.76, an IOU of 0.61, and an inference speed of 11 fps. Dice score, IOU, recall, specificity, accuracy, and precision are the common metrics used for evaluating model performance in segmentation tasks. To evaluate model performance more comprehensively, we used mAP@50 to assess the model's ability to accurately segment and distinguish the different recognition object classes within the IAM dataset. Additionally, we employed SSIM and HD to assess structural similarity and point set similarity between predictions and ground truth. AiLES, based on RF-Net, outperformed SAM, MSA, and DeeplabV3+ across these metrics. RF-Net was originally developed using an ultrasound breast cancer dataset similar to our IAM dataset, making it more suitable for the segmentation task in our study. Notably, AiLES achieved Dice scores of 0.90, 0.87, and 0.80 for the commonly overlooked single peritoneal lesions, tiny lesions, and mesenteric lesions, respectively.
The model matches the accuracy of novice surgeons in segmenting IAM lesions, while also effectively recognizing lesions that novice surgeons failed to recognize (Figs. 4 and 5).
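
As an illustration of the two headline metrics, the following minimal sketch computes the Dice score and IOU for a pair of binary masks. It is not the evaluation code used in this study; the function names and the toy masks are assumptions made for the example. For a single pair of masks the two metrics are related by IOU = Dice / (2 − Dice), which is consistent with the reported values (0.76 / 1.24 ≈ 0.61), although the relation is only approximate when metrics are averaged over many frames.

```python
from typing import Sequence, Tuple

# A 2D binary mask: 1 marks a lesion pixel, 0 marks background.
Mask = Sequence[Sequence[int]]


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Count true positives, false positives, and false negatives pixel by pixel."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t and not p:
                fn += 1
    return tp, fp, fn


def dice_score(pred: Mask, truth: Mask) -> float:
    """Dice = 2*TP / (2*TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def iou_score(pred: Mask, truth: Mask) -> float:
    """IOU = TP / (TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


def iou_from_dice(dice: float) -> float:
    """Convert a single-image Dice score to the equivalent IOU."""
    return dice / (2.0 - dice)


# Toy example (hypothetical masks, not study data).
truth = [[0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
pred = [[0, 1, 1, 1],
        [0, 1, 0, 0],
        [0, 0, 0, 0]]

print(dice_score(pred, truth))  # 0.75
print(iou_score(pred, truth))   # 0.6
```

In this toy case three pixels overlap, one predicted pixel is a false positive, and one lesion pixel is missed, giving a Dice score of 0.75 and an IOU of 0.6.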

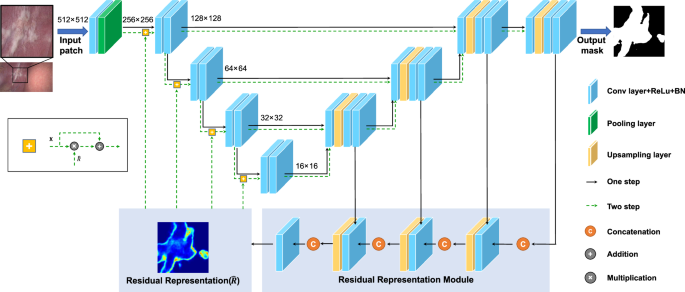

For AI research aimed at clinical applications, choosing an appropriate model is of primary importance. Our findings indicated that, compared to other models, AiLES, based on the residual feedback network (RF-Net), performed best in identifying IAM lesions, achieving a Dice score of 0.76. This is mainly because RF-Net was developed on breast cancer ultrasound images, which were similar to the IAM lesions in our study in exhibiting significant variability in shape, size, and extent (Supplementary Fig. 2)32. AiLES employed RF-Net's core module, the residual representation module, to learn the ambiguous boundaries and complex areas of lesions, thereby enhancing segmentation performance (Fig. 6). The DeeplabV3+ model is widely used in surgical AI studies for recognizing anatomical structures and surgical instruments33,34. However, it only achieved a Dice score of 0.67 in our study, which was lower than the satisfactory performance (Dice score ≥ 0.75) reported in previous studies for anatomy and instrument segmentation33,34. This is mainly due to the irregular shapes, locations, and extent of the IAM lesions, which differ significantly from more regular anatomical structures and surgical instruments. Additionally, SAM is the first general image segmentation foundation model35, which was released during the design phase of our study. However, it had not been fully explored in segmenting medical images36, particularly surgical images, and demonstrated poor performance in various segmentation modes for IAM lesions, including automatic, one-point, and one-box, with Dice scores of 0.14, 0.02, and 0.29, respectively. We believe that the unique characteristics of IAM lesion images differ significantly from those of natural images, leading to the unsatisfactory performance of SAM, which was trained on natural images35.
After fine-tuning, the MSA trained on our dataset37 demonstrated a notable improvement over the original SAM in segmenting IAM, with a Dice score of 0.63. Although SAM and MSA were not specific to our study, we think our results provide valuable data support and references for applying SAM to surgical images. Because the IAM dataset was original and unique, there were no available studies or algorithms for reference and no gold standard model for our dataset. Based on the above factors, we included and evaluated the current mainstream AI models for IAM segmentation, offering a reference workflow for future research on surgical image segmentation. Moreover, the evaluation of different models for real-time intraoperative deployment showed inference speeds of 2 fps for SAM and MSA, 7 fps for DeeplabV3+, and 11 fps for AiLES. According to previous surgical AI studies, inference speeds for surgical image segmentation typically range from 6 fps to 14 fps33,38,39, indicating that the inference speed of AiLES in our study is applicable and acceptable. In the future, the real-time inference speed of AiLES could be further improved by using deep learning acceleration techniques such as TensorRT33.
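
To make the inference speeds more concrete, the short sketch below converts the reported frames per second into per-frame latency and checks each model against the 6 to 14 fps range cited from previous surgical AI studies. The helper names are assumptions introduced for this example; only the fps values and the range come from the text above.

```python
def frame_latency_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds, at a given frames-per-second rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def within_reference_range(fps: float, low: float = 6.0, high: float = 14.0) -> bool:
    """True if fps falls inside the range reported by previous surgical AI studies."""
    return low <= fps <= high


# Inference speeds as reported in this study.
REPORTED_FPS = {"SAM": 2, "MSA": 2, "DeeplabV3+": 7, "AiLES": 11}

for name, fps in REPORTED_FPS.items():
    latency = frame_latency_ms(fps)
    in_range = within_reference_range(fps)
    print(f"{name}: {latency:.0f} ms per frame, within 6-14 fps range: {in_range}")
```

At 11 fps, each frame takes roughly 91 ms to process, compared with 500 ms per frame at 2 fps.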

ResNet-34 is adopted as the encoder path with pre-trained parameters. The network consists of two steps. The first step (black arrows) generates the initial segmentation results and learns the residual representation of missing/ambiguous boundaries and confusing regions. The second step (green dotted arrows) feeds the residual representation into the encoder path and generates more precise segmentation results. AiLES: artificial intelligence laparoscopic exploration system. IAM: intra-abdominal metastasis.

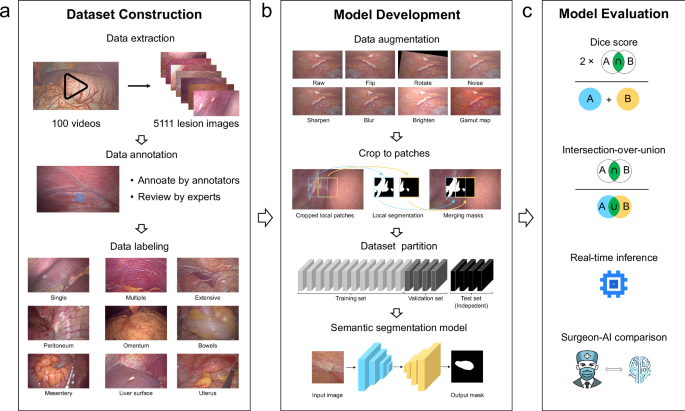

The clinical practicability of an AI model depends not only on model performance but also on an appropriate annotation approach for constructing a high-quality dataset40. Currently, there are several common annotation approaches for computer vision image analysis, including point, bounding box, and polygon. Our study focuses on recognizing and segmenting IAM lesions, which have irregular shapes, extents, and boundaries. For IAM lesion annotation, the point approach only marks the main location of the lesion and fails to capture sufficient visual features, especially for extensive lesions; the bounding-box approach may include partial normal areas, which may cause the model to misidentify normal tissues as lesions, increasing false positives and leading to inaccurate results. In contrast, the polygon approach can accurately outline the boundaries of lesions, regardless of their shape and extent, similar to how surgeons recognize lesions (Supplementary Fig. 3). Therefore, we selected the polygon approach for annotating IAM lesions. Finally, we constructed a high-quality IAM dataset with 5111 frames from 100 LE videos, with a video-to-frame ratio similar to that of several excellent surgical AI studies that used the same annotation approach as ours (Supplementary Table 4)25,34,41,42,43.
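
The difference between bounding-box and polygon annotation can be quantified with a small geometric sketch. The example below uses a hypothetical L-shaped lesion outline (not taken from the IAM dataset) and compares the polygon area, computed with the shoelace formula, against the area of its axis-aligned bounding box. The function names are assumptions introduced for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(vertices: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula (vertices in order)."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_box_area(vertices: List[Point]) -> float:
    """Area of the tightest axis-aligned box that contains every vertex."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def non_lesion_fraction(vertices: List[Point]) -> float:
    """Fraction of the bounding box that lies outside the polygon outline."""
    box = bounding_box_area(vertices)
    if box == 0:
        raise ValueError("degenerate outline: bounding box has zero area")
    return 1.0 - polygon_area(vertices) / box


# Hypothetical irregular (L-shaped) lesion outline in pixel coordinates.
lesion_outline = [(0, 0), (6, 0), (6, 2), (2, 2), (2, 6), (0, 6)]

print(polygon_area(lesion_outline))       # 20.0
print(bounding_box_area(lesion_outline))  # 36
print(round(non_lesion_fraction(lesion_outline), 3))  # 0.444
```

For this outline, about 44% of the bounding box would be normal tissue labeled as lesion, which illustrates why a box annotation can introduce false positives for irregular lesions.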

In clinical practice, peritoneal metastasis is the predominant type of IAM in GC patients. While multiple and extensive peritoneal metastases are readily identifiable, single peritoneal metastatic lesions may be prone to being overlooked due to their tiny size, occult location, or rapid movement of the camera during LE31. The JCOG0501 trial showed that the incidence of omitted IAM lesions in the omentum and mesentery ranges between 1.9% and 5.1%, not lower than the 5.1% incidence of peritoneal metastases44. Also, studies have shown a 10.6–20.0% chance of missing IAM lesions during LE45,46, which could potentially lead to the under-staging of stage IV GC patients. In addition, the REGATTA trial suggests that initial gastrectomy may not be an appropriate treatment option for patients with IAM because of poor prognosis47. Therefore, patients might receive inappropriate gastrectomy due to the underestimation of the tumor stage caused by IAM omission, severely affecting their survival and prognosis. AiLES excels in segmenting single peritoneal lesions (Dice score 0.90), tiny lesions (Dice score 0.87), and mesenteric lesions (Dice score 0.80), and shows robust performance across different locations (Dice scores 0.62–0.93), effectively identifying and segmenting lesions often missed by humans, thereby significantly enhancing the accuracy of tumor staging (Supplementary Movie 1).

Individualized conversion therapy is crucial for stage IV GC patients, who often exhibit tumor heterogeneity and complex metastases48. The calculation of the peritoneal cancer index (PCI) using LE offers a comprehensive evaluation of the extent and pattern of tumor dissemination in the abdominal cavity20, serving as essential information for determining an optimal individualized treatment strategy for stage IV GC patients. Current research and clinical guidelines advocate incorporating PCI scores into treatment decision-making: patients with low PCI scores are recommended to receive CRS and HIPEC for potential survival benefits49; patients with high PCI scores are considered for systemic chemotherapy to manage symptoms and improve quality of life50. All of the above research results confirm the importance of accurate PCI assessment. It is well known that PCI assessment must evaluate the metastatic conditions on the parietal peritoneum, visceral peritoneum, and the surfaces of various organs. Recently, Thomas et al. developed a computer-assisted staging laparoscopy (CASL) system that employs object detection and classification algorithms to detect and distinguish between benign and malignant oligometastatic lesions31. CASL is currently limited to analyzing static images of single lesions in the peritoneum and liver surface. In contrast, our AiLES could recognize IAM lesions with different metastatic extents (single, multiple, and extensive) and metastatic locations (peritoneum, omentum, bowels, mesentery, liver surface, and uterus) in real time (Supplementary Movie 1). Consequently, AiLES could serve as a valuable complement to the CASL system, compensating for its limitations and illustrating the tremendous potential of AI algorithms to assist intraoperative PCI assessment for GC patients.
However, our AiLES is not yet capable of recognizing the specific organs or abdominal regions where IAM lesions are located, nor can it measure lesion size during surgery, both of which are fundamental factors for AI-based automated PCI assessment. AI for IAM recognition and automated PCI assessment are not only different clinical studies but also different CV tasks. It should be emphasized that IAM recognition in our study is an important foundation and first step toward achieving automated PCI assessment in the future.
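
To illustrate why region recognition and lesion size measurement are both prerequisites for automated PCI assessment, the following sketch computes a PCI under the commonly described Sugarbaker scheme. This scheme is an assumption brought in for the example and is not detailed in this study: 13 abdominopelvic regions, each assigned a lesion size score from 0 to 3 based on the largest lesion in that region, summed to a total between 0 and 39. The function names, the input format, and the example values are hypothetical, and confluent disease (also scored 3 in that scheme) is not modeled here.

```python
from typing import Dict

NUM_REGIONS = 13  # regions indexed 0-12 (assumed Sugarbaker scheme)


def lesion_size_score(largest_diameter_cm: float) -> int:
    """Map the largest lesion diameter in a region to a lesion size score of 0-3."""
    if largest_diameter_cm < 0:
        raise ValueError("diameter cannot be negative")
    if largest_diameter_cm == 0:
        return 0  # no visible tumor
    if largest_diameter_cm <= 0.5:
        return 1  # up to 0.5 cm
    if largest_diameter_cm <= 5.0:
        return 2  # above 0.5 cm, up to 5 cm
    return 3      # above 5 cm


def peritoneal_cancer_index(largest_lesion_cm_by_region: Dict[int, float]) -> int:
    """Sum lesion size scores over all regions; regions not listed count as 0."""
    total = 0
    for region, diameter_cm in largest_lesion_cm_by_region.items():
        if not 0 <= region < NUM_REGIONS:
            raise ValueError(f"unknown region index: {region}")
        total += lesion_size_score(diameter_cm)
    return total


# Hypothetical findings: region index -> largest lesion diameter in cm.
findings = {0: 0.3, 2: 1.0, 5: 6.0}
print(peritoneal_cancer_index(findings))  # 6
```

Every input to this calculation is a (region, size) pair, so a segmentation mask alone is not sufficient: the system would also need to know where each lesion lies and how large it is.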

In the clinical applications of AI, researchers are primarily concerned with how AI compares to the proficiency of experienced physicians23. In studies involving object detection and classification algorithms, AI and physician performance can be evaluated by simple 'fast/slow' and 'yes/no' evaluations25,31. However, these evaluation methods are insufficient for semantic segmentation tasks33, where performance differences between humans and AI are less quantifiable. To address this, we used two approaches to compare surgeons and AI. First, using expert-annotated segmentations as the ground truth, we assessed the segmentation performance of AI and novice surgeons on metastatic lesions with varying extents and locations, finding no significant difference. This suggests that AI performs at a level comparable to that of novice surgeons in lesion identification. Second, we selected images of tiny, occult lesions for identification by AI and a novice surgeon. In several cases, AI successfully identified metastatic lesions missed by the novice surgeon. Since we used the IAM annotations reviewed by expert surgeons as the gold standard for model training and development, the segmentation performance of AiLES cannot surpass that of the expert surgeons, which is why we did not compare AiLES with experts. Furthermore, LE is often performed by novice surgeons under the supervision of senior surgeons, and novice surgeons may lack experience in recognizing IAM and may omit occult lesions in clinical practice. Therefore, there may be sufficient clinical interest and demand to develop an AI system to assist novice surgeons in recognizing IAM lesions. As expected, the surgeon-AI comparison demonstrated that AiLES had comparable performance to novice surgeons and outperformed them in the recognition of tiny and easily overlooked lesions.
The assistance of AiLES is akin to collaborative judgment and decision-making between two surgeons, thereby shortening the learning curve for recognizing IAM and reducing the omission risk.

LE is recommended by several international guidelines for advanced GC in clinical practice7,15,16, which suggests that AiLES holds significant promise for IAM recognition during actual surgical procedures. Since AiLES is developed based on actual surgery videos, it can be integrated into laparoscopic devices. Our plan is to display the visual results of AiLES recognition on a separate screen, alongside the laparoscopic screen51,52. This visual system will assist surgeons in performing accurate intraoperative tumor staging, avoiding unnecessary gastrectomy, and reducing related healthcare costs, without altering their established surgical routines. AiLES has not only potential clinical applications in the surgical workflow but also practical value in surgical training for residents, fellows, and young attending surgeons. Preoperatively, young surgeons can watch both the original and AI-assisted lesion visualization videos to enhance their lesion recognition skills, rapidly improving their abilities and reinforcing their memory of various lesion shapes and extents. Intraoperatively, AiLES can assist in identifying tiny, single, and occult IAM lesions and help avoid incorrect tumor staging. Postoperatively, AiLES allows young surgeons to comprehensively review lesion images from surgery videos, helping them identify weaknesses and make targeted improvements. Finally, it is important to emphasize that our AI model for IAM recognition can assist surgeons but cannot replace them in making treatment decisions. Automated treatment decision-making is a different clinical problem and a multimodal AI task. In surgical practice, even if the performance of AiLES improves in the future, final intraoperative treatment decisions should be made by surgeons.

This study has several limitations. First, the current dataset exhibits an imbalance in the distribution of IAM lesions across different locations, such as the omentum, bowels, mesentery, and uterus. Specifically, the dataset did not include frames of ovarian implantation metastasis and lacked sufficient frames of metastasis located on the small and large bowels53. It is necessary to expand the IAM dataset with a more balanced distribution of various metastatic locations. Second, all videos in our dataset were collected from GC surgeries, which may limit the generalization of AiLES to other types of abdominal tumor surgeries. To address this issue, we will collect image data from surgery videos with IAM in various cancers (e.g., colorectal cancer, liver cancer, and pancreatic cancer). Third, this is a single-center retrospective study, requiring further multi-center and multi-device validation to strengthen the robustness and applicability of AiLES.

In conclusion, this study demonstrates the use of a real-time semantic segmentation AI model during the LE phase of laparoscopic GC surgery, leading to improved recognition of IAM lesions. AiLES demonstrates comparable performance to novice surgeons and outperforms them in the identification of tiny and easily overlooked lesions, thereby potentially reducing the risk of inappropriate treatment decisions due to under-staging. The results emphasize the significance of incorporating AI technologies into surgery to enhance accurate tumor staging and assist individualized treatment decision-making. In the future, we will improve our model's generalization and robustness by expanding the IAM dataset with comprehensive metastatic locations from videos of various abdominal cancers. Moreover, it is imperative to carry out multi-center and multi-device validation for the clinical implementation of AiLES.