“In 2024, our investment in AI will focus on the application layer,” Wang said on the earnings call. “Our advances in AI have enabled us to improve operating efficiency across our existing businesses.”

Large Language Models (LLMs) have made significant advances in the field of Information Extraction (IE). Information extraction is a task in Natural Language Processing (NLP) that involves identifying and extracting specific pieces of information from text. LLMs have demonstrated strong results in IE, especially when combined with instruction tuning. Through instruction tuning, these models are trained to annotate text according to predetermined guidelines, which improves their ability to generalize to new datasets. This means that even on unseen data, they can perform IE tasks successfully by following instructions.

However, even with these improvements, LLMs still face many difficulties when working with low-resource languages. These languages lack both the unlabeled text required for pre-training and the labeled data required for fine-tuning models. Because of this lack of data, it is challenging for LLMs to achieve good performance in these languages.

To overcome this, a team of researchers from the Georgia Institute of Technology has introduced the TransFusion framework. In TransFusion, models are fine-tuned to work with data translated from low-resource languages into English. With this method, the original low-resource language text and its English translation both provide information that the models can use to make more accurate predictions.
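As a rough illustration of the idea of giving a model both the original text and its English translation, the sketch below pairs the two in a single input string. It is not the authors’ implementation: the `build_fused_input` helper, the input layout, and the `toy_translate` stand-in for an external machine translation system are all assumptions made for this example.

```python
from typing import Callable


def build_fused_input(text: str, translate: Callable[[str], str]) -> str:
    """Pair a low-resource-language sentence with its English translation.

    The "Original / English translation" layout is a hypothetical format
    chosen for illustration, not the format used in the paper.
    """
    english = translate(text)
    return f"Original: {text}\nEnglish translation: {english}"


def toy_translate(text: str) -> str:
    """Stand-in for an external MT system (assumption: a real setup calls one)."""
    lookup = {"Habari ya asubuhi": "Good morning"}  # Swahili -> English
    return lookup.get(text, text)


print(build_fused_input("Habari ya asubuhi", toy_translate))
```

A model that sees both lines can, in principle, lean on the English side where its knowledge of the source language is weak, which is the intuition the paragraph above describes.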

This framework aims to improve IE in low-resource languages by using external Machine Translation (MT) systems. It involves three main steps.

Building on this framework, the team has also introduced GoLLIE-TF, an instruction-tuned, cross-lingual LLM tailored specifically for IE tasks. GoLLIE-TF aims to reduce the performance gap between high- and low-resource languages. The combined goal of the TransFusion framework and GoLLIE-TF is to improve LLMs’ effectiveness when handling low-resource languages.

Experiments on twelve multilingual IE datasets, covering a total of fifty languages, have shown that GoLLIE-TF works well. Compared with the base model, the results demonstrate that GoLLIE-TF performs better at zero-shot cross-lingual transfer. This means that without additional training data, it can more effectively apply its acquired skills to new languages.

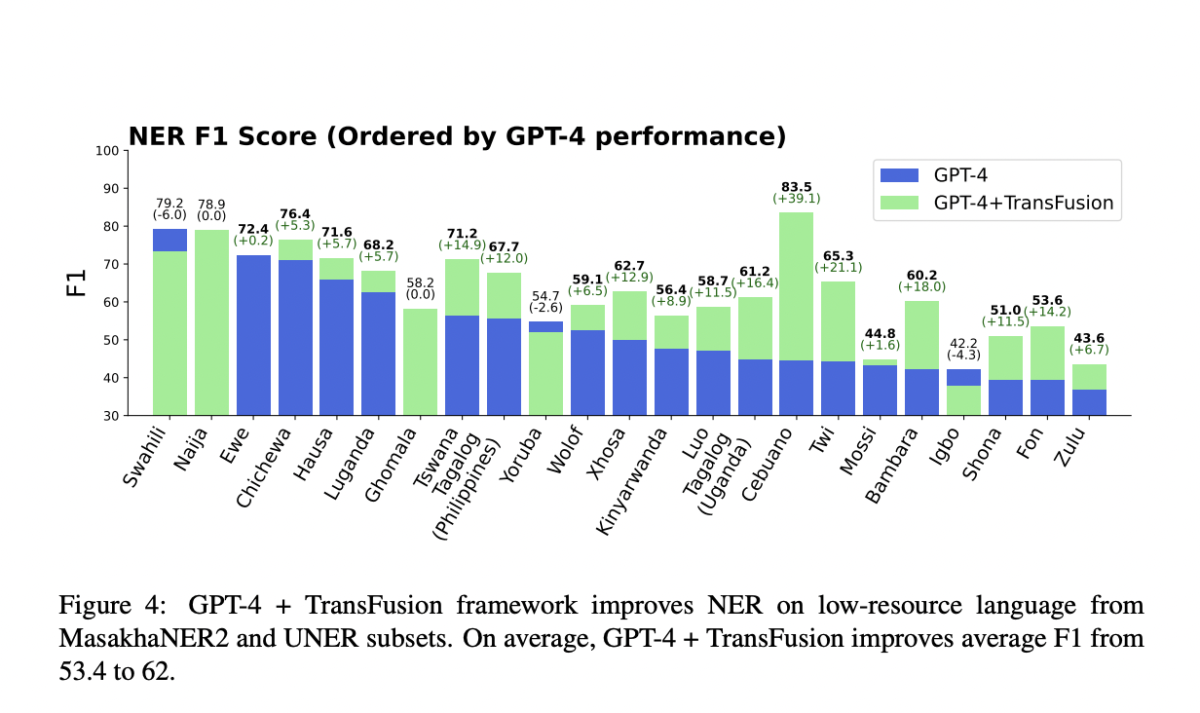

TransFusion applied to proprietary models such as GPT-4 considerably improves performance on low-resource language named entity recognition (NER). When prompting was used, GPT-4’s performance increased by 5 F1 points. Further improvements were obtained by fine-tuning various language model types using the TransFusion framework; decoder-only architectures improved by 14 F1 points, while encoder-only designs improved by 13 F1 points.

In conclusion, TransFusion and GoLLIE-TF together provide a potent solution for improving IE tasks in low-resource languages. They show notable improvements across many models and datasets, helping to reduce the performance gap between high-resource and low-resource languages by using English translations and fine-tuning models to fuse annotations.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.

These two tech giants play a pioneering role in the AI space.

Artificial intelligence (AI) has turned out to be a hot investing trend over the past year and a half. That is due largely to the huge amount of capital being poured into this niche. With AI, companies and governments are expected to capture the large economic gains that this technology is forecast to deliver in the long run.

PwC estimates that AI adoption could boost global productivity and contribute $15.7 trillion to the world economy by 2030. Companies driving the AI boom have seen their stock prices rise rapidly in the past 18 months.

For instance, an investment of $10,000 in shares of Nvidia (NVDA) at the beginning of 2023 is now worth about $85,080. A similar investment in shares of Microsoft (MSFT) is now worth roughly $18,920. Both of these “Magnificent Seven” stocks play a pioneering role in the proliferation of AI, which is why they could turn out to be big winners over time.
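To put those dollar figures in percentage terms, here is a small sketch of the arithmetic. The `total_return_pct` helper is a name introduced for this example, and the calculation ignores dividends, taxes, and fees.

```python
def total_return_pct(initial: float, final: float) -> float:
    """Percentage gain from an initial stake to its current value."""
    return (final - initial) / initial * 100


# Figures quoted in the article for a $10,000 stake made at the start of 2023.
nvidia_gain = total_return_pct(10_000, 85_080)
microsoft_gain = total_return_pct(10_000, 18_920)

print(f"Nvidia: {nvidia_gain:.1f}%")        # about 750.8%
print(f"Microsoft: {microsoft_gain:.1f}%")  # about 89.2%
```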

Investors looking to build a million-dollar portfolio would do well to add these tech titans to their holdings. Let’s look at the reasons why.

The roaring demand for AI chips has supercharged Nvidia’s growth in the past year and a half. That is evident from the following chart:

NVDA Revenue (TTM) data by YCharts.

Nvidia’s bottom line has grown at a much faster pace than its revenue. That is because of the incredible pricing power it enjoys in the AI chip market. Its market share reportedly ranges between 70% and 95%, according to Mizuho Securities. This dominant position is the reason why Nvidia generates stunning profits on sales of its popular H100 processors.

That trend could continue with the company’s new Blackwell architecture-based chips. Independent investment banking firm Raymond James estimates that it costs Nvidia $6,000 to manufacture each unit of its Blackwell B200 graphics processing unit (GPU). Given that the company sells these processors for $30,000 to $40,000 each, its profit margins are likely to remain fat in the future.
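The implied per-unit margin can be worked out from those two estimates. The sketch below is only a back-of-the-envelope calculation: `unit_gross_margin` is a helper named for this example, and it counts the estimated manufacturing cost alone, leaving out research, software, and other operating costs.

```python
def unit_gross_margin(price: float, unit_cost: float) -> float:
    """Share of the selling price left after the per-unit manufacturing cost."""
    return (price - unit_cost) / price


UNIT_COST = 6_000  # Raymond James estimate quoted in the article

# Low and high ends of the quoted B200 selling-price range.
margins = {price: unit_gross_margin(price, UNIT_COST) for price in (30_000, 40_000)}

for price, margin in margins.items():
    print(f"${price:,}: {margin:.0%}")  # 80% at $30,000, 85% at $40,000
```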

Even better, the demand for Nvidia’s Blackwell processors is so strong that the company forecasts demand will exceed supply going into 2025. Nvidia’s foundry partner Taiwan Semiconductor Manufacturing is expected to increase its advanced chip-packaging capacity by 150% this year and 70% in 2025 to help the graphics specialist meet the robust demand for its new chips.

More importantly, analysts expect Nvidia to maintain its dominant position in the AI chip market. Analyst Beth Kindig of I/O Fund, a technology-focused research provider, estimates that Nvidia’s addressable market in AI chips could hit a whopping $1 trillion in 2030, and the company is likely to capture a significant share of that market thanks to its solid moat.

Meanwhile, Mizuho analyst Vijay Rakesh expects Nvidia’s data center revenue to hit $280 billion in 2027. That would be a huge increase over the $47.5 billion in data center revenue it generated in the previous fiscal year. It is not surprising that analysts are so bullish about Nvidia’s AI chip dominance. The company exercises solid control over the AI chip supply chain, and it has been pushing the envelope on the product-development front to ensure that it brings new AI chips to the market faster than before.

So, even if Nvidia loses some of its share but remains the bigger player in the AI chip market, it should be able to sustain its impressive growth. Investors looking to buy an AI stock that could help grow their investments significantly and contribute to a million-dollar portfolio would do well to buy Nvidia, given that it sports a price-to-earnings-to-growth ratio (PEG ratio) of just 0.09.

A PEG ratio of less than 1 suggests that a stock is undervalued for the growth that it is forecasted to deliver, and Nvidia seems to be attractively valued on that front.
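For readers unfamiliar with the metric, the PEG ratio is commonly computed as the price-to-earnings multiple divided by the expected annual earnings growth rate, expressed in percent. The sketch below shows that calculation. The inputs are hypothetical and are not Nvidia’s figures, since the article does not state which multiple or growth estimate sits behind the 0.09 reading.

```python
def peg_ratio(price_to_earnings: float, eps_growth_pct: float) -> float:
    """P/E multiple divided by expected annual EPS growth (in percent)."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is only meaningful for positive expected growth")
    return price_to_earnings / eps_growth_pct


# Hypothetical inputs for illustration only (not taken from the article).
example_peg = peg_ratio(price_to_earnings=30.0, eps_growth_pct=40.0)

print(f"PEG = {example_peg:.2f}")  # 0.75, below the threshold of 1
```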

Nvidia’s chips are being bought by tech giants such as Microsoft to power their AI models. As a result, Microsoft is accelerating its growth thanks to robust customer demand for its various AI solutions.

For instance, Microsoft’s cloud business is gaining share thanks to the growing demand for its Azure OpenAI service. The company points out that more than 65% of Fortune 500 companies use Azure OpenAI to power AI applications. More importantly, Microsoft reports an increase in average spending by Azure AI customers.

On its April earnings conference call, CEO Satya Nadella pointed out that “The number of $100 million-plus Azure deals increased over 80% year over year, while the number of $10 million-plus deals more than doubled.”

AI contributed seven percentage points of growth to Microsoft’s Azure cloud business last quarter, a small acceleration over the six percentage points of growth it drove in the preceding quarter. The improving momentum in new contracts suggests that Microsoft’s cloud business is likely to get a bigger AI boost in the future.

More importantly, the size of the global cloud AI market is forecasted to hit $396 billion in 2029, growing at an annual rate of 38%. Meanwhile, the overall cloud-computing market could be worth a whopping $1.44 trillion in 2029, according to Mordor Intelligence.

So, there is a whole new growth opportunity for Microsoft to tap, as it generated $26.7 billion in revenue from the cloud business last quarter, translating into an annual revenue run rate of just over $105 billion. With Microsoft gaining two percentage points of market share year over year in the global cloud infrastructure market in the first quarter of 2024 and currently controlling 25% of this lucrative space, it could win big from the AI-fueled growth in the cloud market if it continues to win more market share.
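The run-rate figure follows from annualizing a single quarter. A minimal sketch, assuming the simple convention of multiplying the latest quarter by four (the `annual_run_rate` name is introduced for this example):

```python
def annual_run_rate(quarterly_revenue: float) -> float:
    """Annualize one quarter of revenue by assuming it repeats four times."""
    return quarterly_revenue * 4


# Cloud revenue for the latest quarter, in billions of dollars (from the article).
cloud_run_rate = annual_run_rate(26.7)

print(f"Run rate: ${cloud_run_rate:.1f} billion")  # about $106.8 billion
```

This lines up with the article’s “just over $105 billion” description; a run rate assumes no growth or seasonality across the year, so it is a rough yardstick and not a forecast.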

Even better, analysts project healthy growth in Microsoft’s top line in the current fiscal year and beyond. It is expected to finish the ongoing fiscal year with $245 billion in revenue, an increase of 15% over the previous year.

MSFT Revenue Estimates for Current Fiscal Year data by YCharts.

Microsoft’s growth estimates have been heading higher thanks to the growing traction of its AI business. This trend could continue as Microsoft’s cloud AI business strengthens. Investors looking to add a top tech stock to their portfolios that could deliver strong gains on the back of growing AI adoption would do well to buy shares of Microsoft before it jumps higher and adds to the impressive gains it has clocked in the past year and a half.