The field of AI is so full of jargon that it can be very hard to understand what is actually happening with each new development. The fact is that AI is everywhere, but not everyone understands what we're talking about when we refer to it.

To help you better understand what's going on, The Verge has compiled a list of some of the most common AI terms, and we're bringing it to you translated and adapted to your language.

First, let's define what AI is (we talked about agentic AI the other day), and then we'll explain each term related to artificial intelligence. Let's go!

What exactly is AI?

Often abbreviated as AI, artificial intelligence is technically the field of computer science dedicated to creating computer systems that can think like a human being.

But these days there's a lot of talk about AI as a technology and even as an entity, and it's hard to pin down exactly what it means. The term is also frequently used as a marketing buzzword, which makes its definition more fluid than it should be.

Google, for example, talks a lot about how it has been investing in AI for years. This refers to how many of its products improve thanks to artificial intelligence and to how the company offers tools like Gemini that seem intelligent.

There are underlying AI models that power many AI tools, such as OpenAI's GPT. Then there's Meta CEO Mark Zuckerberg, who has used "AI" as a noun to refer to individual chatbots.

As more companies try to sell AI as the next big thing, the ways they use the term and other related nomenclature can become even more confusing.

AI Terminology

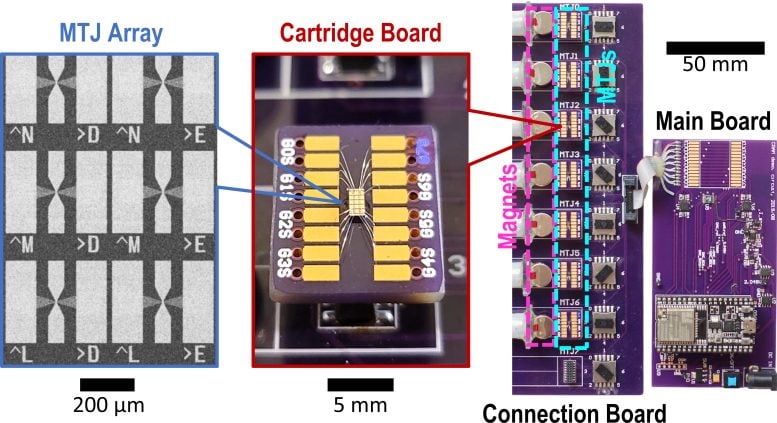

Machine Learning: Machine learning systems are trained (we'll explain training later) on data so they can make predictions about new information. That's how they "learn." Machine learning is a field within artificial intelligence and is fundamental to many AI technologies.
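To make "trained on data to make predictions" a bit more concrete, here is a deliberately tiny sketch in Python. It is not how modern AI systems are built: it simply fits a straight line (ordinary least squares) to a handful of made-up examples and then predicts a value it has never seen. The data and the function names `train` and `predict` are hypothetical, chosen only for illustration.

```python
# Toy illustration of "learning from data": fit a straight line to example
# points with ordinary least squares, then use it to predict a new value.

def train(xs, ys):
    """Learn the slope and intercept that best fit the (x, y) examples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x, give the least-squares slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to an input the model has not seen before."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 57, 61, 68, 72]

model = train(hours, scores)
print(round(predict(model, 6), 1))
```

The "training" step here is just arithmetic over the examples; the point is that the prediction for 6 hours comes from a pattern extracted from the data rather than from a rule someone wrote by hand.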

Artificial General Intelligence (AGI): Artificial intelligence that is as intelligent as, or more intelligent than, a human. OpenAI in particular is investing heavily in AGI. It could be an incredibly powerful technology, but for many people it's also potentially the most frightening prospect of what AI could become: just think of all the movies we've seen about superintelligent machines taking over the world! To make matters worse, work is also being done on "superintelligence," that is, an AI that is far more intelligent than a human.

Generative AI: AI technology capable of generating new text, images, code, and much more. Think of all the interesting (though sometimes problematic) answers and images you've seen produced by ChatGPT or Google's Gemini. Generative AI tools run on AI models that are usually trained on large amounts of data.

Hallucinations: Because generative AI tools are only as good as the data they've been trained on, they can confidently "hallucinate," or make up what they believe are the best answers to questions. These hallucinations mean that systems can make factual errors or give incoherent answers. There's even some debate over whether AI hallucinations can ever be "fixed."

Bias: Hallucinations aren't the only problems that have come up with AI, and this could have been predicted: after all, AI is programmed by humans. As a result, depending on their training data, AI tools can exhibit biases.

AI Models: AI models are trained on data so they can perform tasks or make decisions on their own.

Large Language Models, or LLMs: A type of AI model that can process and generate text in natural language. Claude, from Anthropic, is an example of an LLM, which is described as "a helpful, honest, and harmless assistant with a conversational tone."
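Real LLMs are neural networks that typically have billions of parameters, but the basic idea of generating text by predicting the next word from patterns in training text can be sketched in a few lines. The toy Python below uses a bigram model, a much simpler technique swapped in purely for illustration: it records which word follows which in a tiny made-up corpus, then generates text by repeatedly picking a plausible next word. The corpus and the function names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for each word, every word that follows it in the training text."""
    words = text.split()
    follows = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return follows


def generate(follows, start, length, seed=0):
    """Generate up to `length` words by repeatedly sampling a next word."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:  # no known continuation: stop early
            break
        out.append(rng.choice(options))
    return " ".join(out)


# Hypothetical training corpus.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
print(generate(model, "the", 6))
```

Because a model like this only recombines word pairs it has seen, it can produce a fluent sentence that never appeared in its training text, such as "the dog sat on the mat." Text can sound right without being grounded in anything true, which is part of why hallucinations happen.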